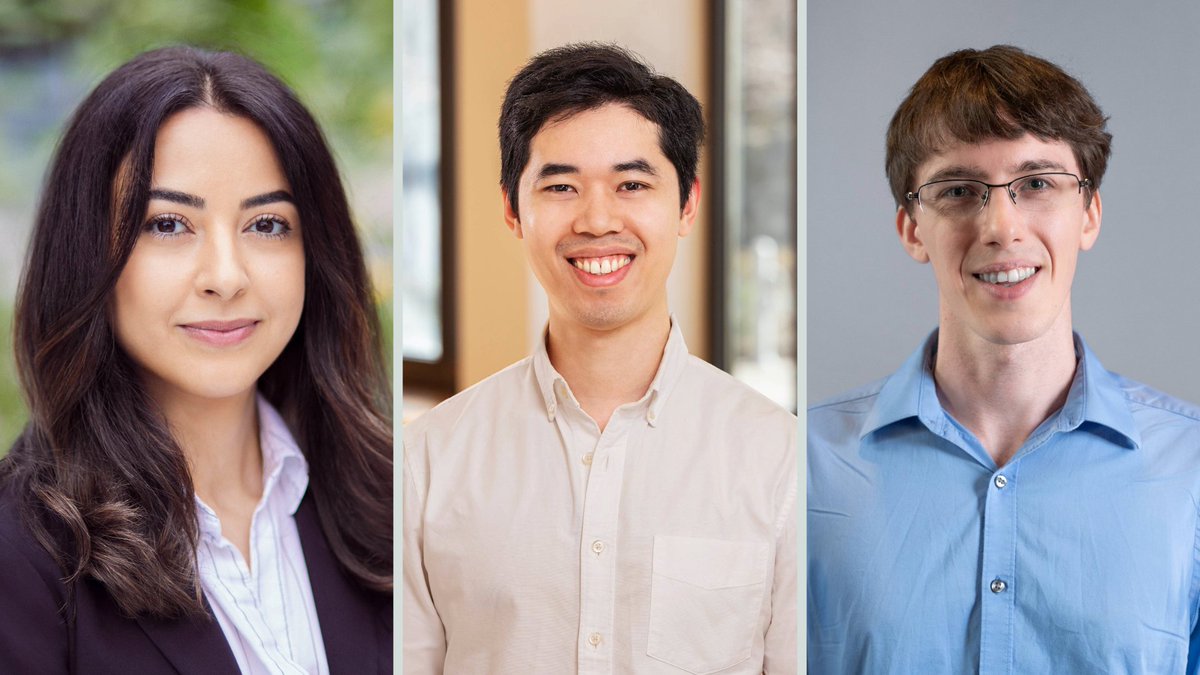

Tri Dao

@tri_dao

Asst. Prof @PrincetonCS, Chief Scientist @togethercompute. Machine learning & systems.

ID: 568879807

https://tridao.me 02-05-2012 07:13:50

795 Tweets

28.28K Followers

602 Following

Now live. A new update to our Jamba open model family 🎉 Same hybrid SSM-Transformer architecture, 256K context window, efficiency gains & open weights. Now with improved grounding & instruction following. Try it on AI21 Studio or download from Hugging Face 🤗 More on what