Tris Warkentin

@triswarkentin

Tech builder, Google DeepMind Product Management Director, and all-around happy guy. Launched Gemma, Bard, Imagen, and many other neat AI things.

ID: 111053528

03-02-2010 17:12:46

110 Tweets

938 Followers

128 Following

Thank you to Google DeepMind for letting us be a part of their Gemma Dev Day. 🙌 ICYMI: Gemma 3 is a family of lightweight models with multimodal and multilingual capability that are ready to run on all your NVIDIA hardware. Our very own Asier Arranz demoed how to run Gemma on

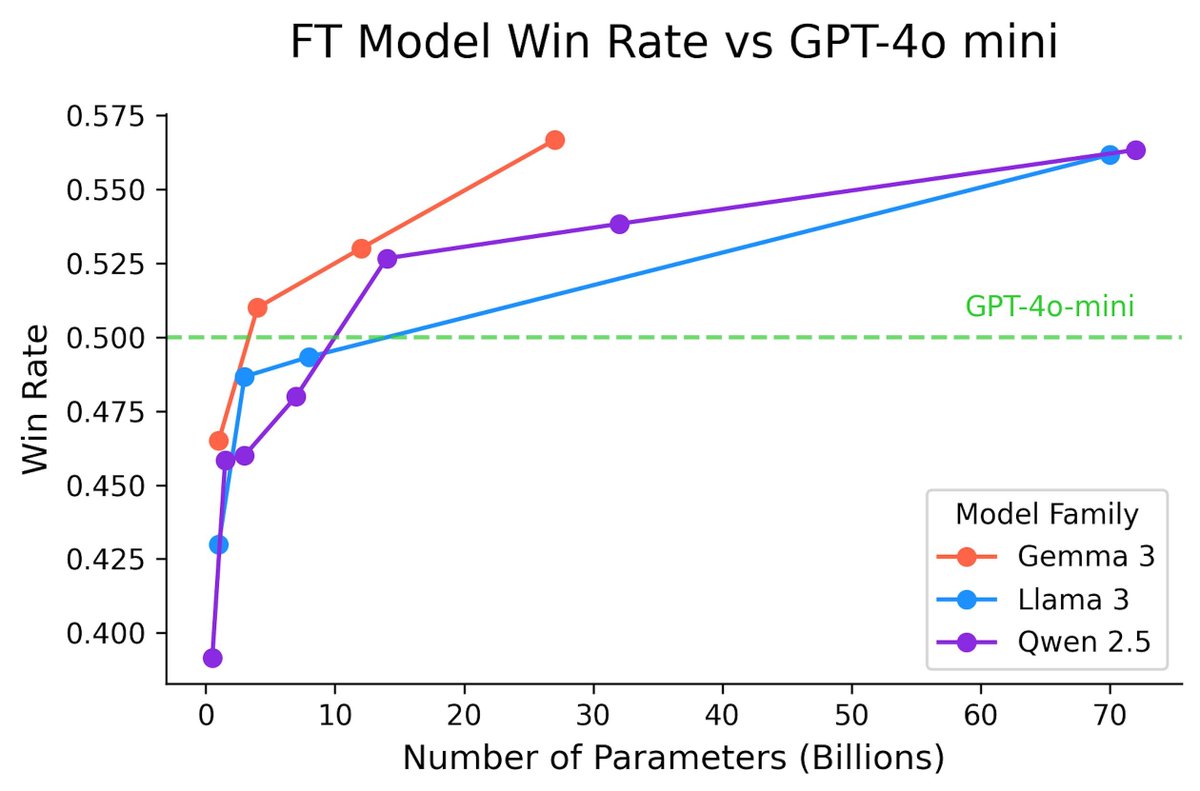

New lmarena.ai leaderboard update includes new Gemma 3 sizes: 👀

- Gemma-3-27B (1341) ~ Qwen3-235B-A22B (1342)
- Gemma-3-12B (1321) ~ DeepSeek-V3-685B-37B (1318)
- Gemma-3-4B (1272) ~ Llama-4-Maverick-17B-128E (1270)

The Google DeepMind Gemma team cooked. 🧑‍🍳 Time to work on