tsvetshop

@tsvetshop

Group account for Prof. Yulia Tsvetkov's lab at @uwnlp. We work on low-resource, multilingual, social-oriented NLP. Details on our website:

ID: 1376970544390750214

http://tsvetshop.github.io 30-03-2021 18:54:04

111 Tweets

909 Followers

133 Following

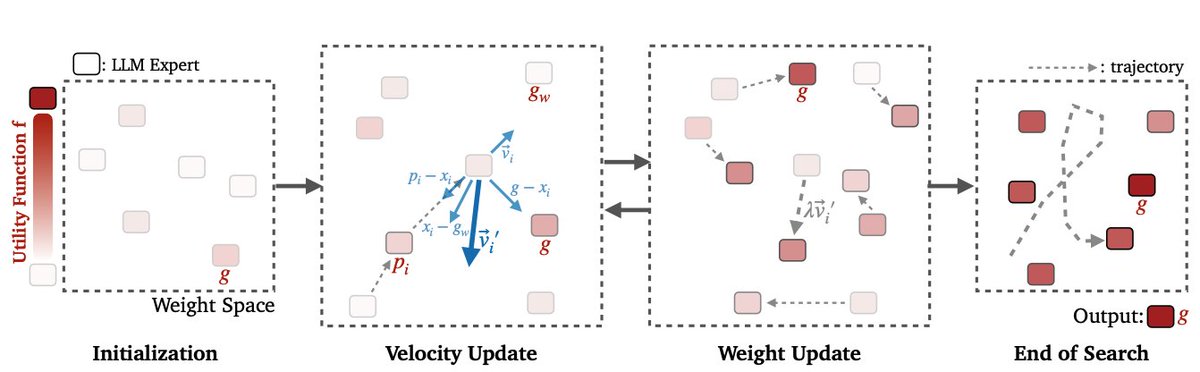

Accepted to COLM! 🎉 Proxy-tuning achieves finetuning, but at decoding-time! Enables easy customization, adapting to private data, and tuning an arbitrary # of models for the cost of training one proxy. Super excited to be a part of the first Conference on Language Modeling 😀

Thank you for the double awards! #ACL2024 ACL 2025 Area Chair Award, QA track; Outstanding Paper Award. Huge thanks to collaborators!!! Weijia Shi @YikeeeWang Wenxuan Ding Vidhisha Balachandran tsvetshop

Huge congrats to Oreva Ahia and Shangbin Feng for winning awards at #ACL2024! DialectBench: Best Social Impact Paper Award (arxiv.org/abs/2403.11009). Don't Hallucinate, Abstain: Area Chair Award, QA track & Outstanding Paper Award (arxiv.org/abs/2402.00367).

🚨Curious how LLMs deal with uncertainty? In our new #EMNLP2024 Findings paper, we dive deep into their ability to abstain from answering when given insufficient or incorrect context in science questions 💡arxiv.org/pdf/2404.12452 Joint work w/ Bill Howe Lucy Lu Wang UW iSchool

Thank you Leah and Emma for this important call for action Emma Pierson nature.com/articles/d4158…

Congratulations to our amazingly talented lab member Shangbin Feng on receiving 3 PhD fellowship awards in just the past few weeks 🎉🎉🎉 Thank you so much to IBM, Jane Street, and Baidu. Very well deserved, Shangbin! 🎉❤️💪