Tu Vu

@tuvllms

Research Scientist @GoogleDeepMind & Assistant Professor @VT_CS. PhD from @UMass_NLP. Google FLAMe/FreshLLMs/Flan-T5 Collection/SPoT #NLProc

ID: 850104281197928454

https://tuvllms.github.io 06-04-2017 21:53:49

1.1K Tweets

3.3K Followers

939 Following

We’re excited to introduce Text-to-LoRA: a Hypernetwork that generates task-specific LLM adapters (LoRAs) based on a text description of the task. Catch our presentation at #ICML2025! Paper: arxiv.org/abs/2506.06105 Code: github.com/SakanaAI/Text-… Biological systems are capable of

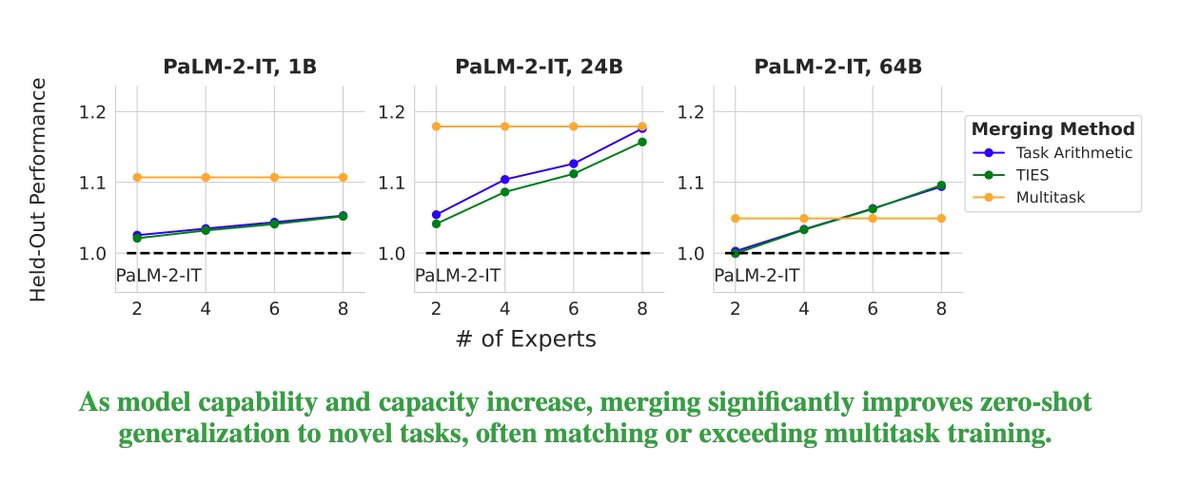

Excited to share that our paper on model merging at scale has been accepted to Transactions on Machine Learning Research (TMLR). Huge congrats to my intern Prateek Yadav and our awesome co-authors Jonathan Lai, Alexandra Chronopoulou, Manaal Faruqui, Mohit Bansal, and Tsendsuren 🎉!!