Udaya Ghai

@udayaghai

Applied Scientist @Amazon, PhD in Machine Learning @PrincetonCS

ID: 1524085579918491649

https://udayaghai.com 10-05-2022 17:55:25

28 Tweets

123 Followers

572 Following

Together with Elad Hazan, Cong, Andrea Zanette, and Nati, we are organizing a long program on reinforcement learning and control at the Institute for Mathematical and Statistical Innovation! Join us for workshops on the frontiers of online/offline RL, control, and multi-agent RL, and for opportunities to present your research.

Akshay Krishnamurthy and Audrey Huang have written a nice blog post on the intersection of reinforcement learning theory and language model post-training: let-all.com/blog/2025/03/0…

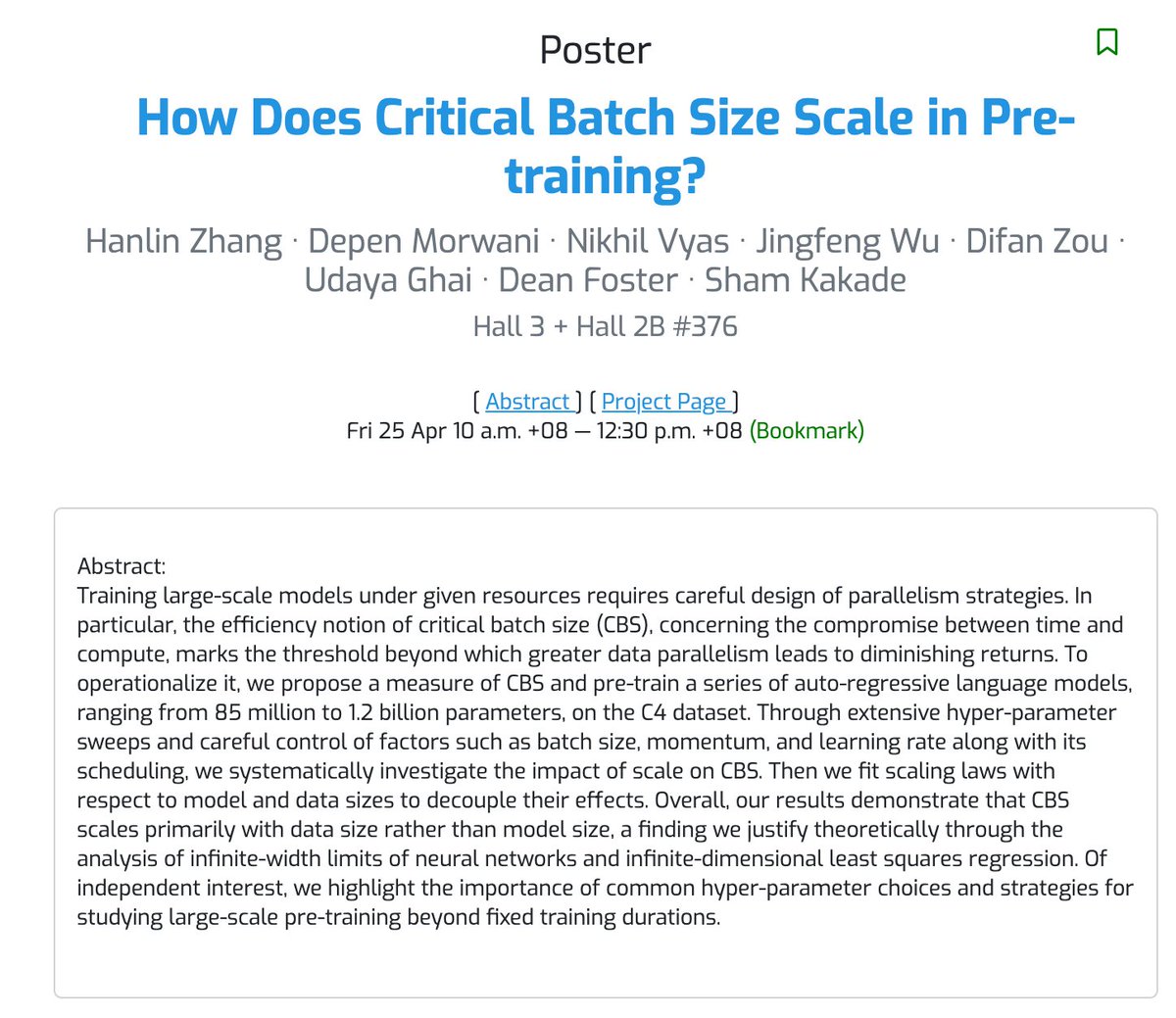

Hanlin Zhang John J. Vastola Marinka Zitnik Naomi Saphra hiring PhD students 🧈🪰 Zechen Zhang 4/25 at 10am: 'How Does Critical Batch Size Scale in Pre-training?' Hanlin Zhang · Depen Morwani · Nikhil Vyas · Jingfeng Wu · Difan Zou · Udaya Ghai · Dean Foster · Sham Kakade Submission: openreview.net/forum?id=JCiF0…

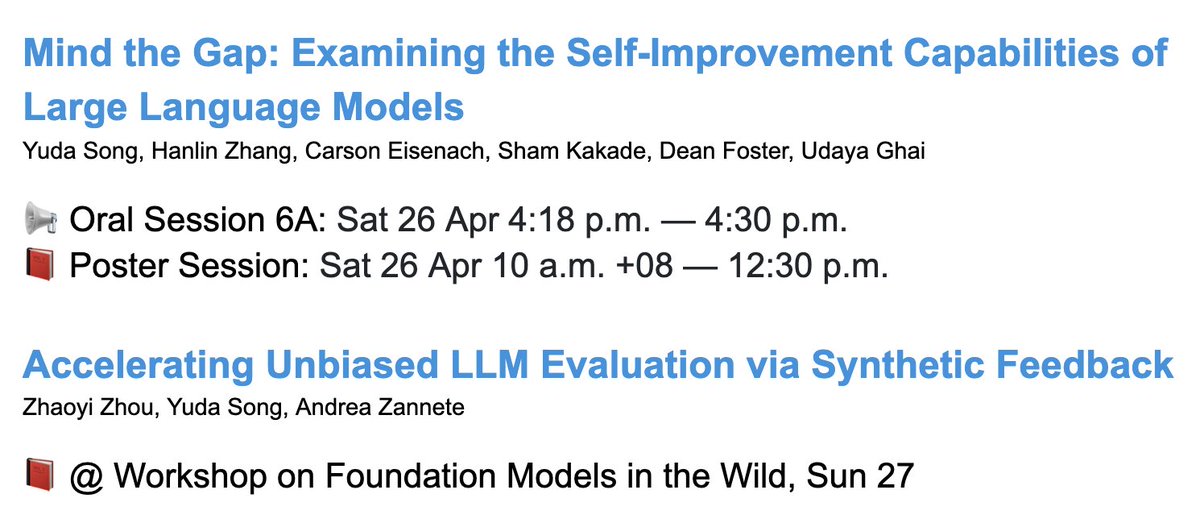

4/26 at 10am: 'Mind the Gap: Examining the Self-Improvement Capabilities of Large Language Models' Yuda Song · Hanlin Zhang · Carson Eisenach · Sham Kakade · Dean Foster · Udaya Ghai Submission: openreview.net/forum?id=mtJSM…

Still noodling on this, but the generation-verification gap proposed by Yuda Song, Hanlin Zhang, Sham Kakade, Udaya Ghai, et al. in arxiv.org/abs/2412.02674 is a very nice framework that unifies a lot of thoughts around self-improvement, verification, and bootstrapping reasoning.

Discussing "Mind the Gap" tonight at Haize Labs's NYC AI Reading Group with Leonard Tang and will brown. Authors study self-improvement through the "Generation-Verification Gap" (model's verification ability over its own generations) and find that this capability log scales with