UNC NLP

@uncnlp

NLP (+ML/AI/CV) research at @UNCCS @UNC

Faculty: @mohitban47+@gberta227+@snigdhac25+@shsriva+@tianlongchen4+@huaxiuyaoml+@dingmyu+@zhun_deng +@SenguptRoni et al

ID: 875914488020701188

http://nlp.cs.unc.edu 17-06-2017 03:14:22

2.2K Tweets

3.3K Followers

405 Following

🎉 Our paper, GenerationPrograms, which proposes a modular framework for attributable text generation, has been accepted to Conference on Language Modeling! GenerationPrograms produces a program that executes to text, providing an auditable trace of how the text was generated and major gains on

🥳 Gap year update: I'll be joining Ai2/University of Washington for 1 year (Sep2025-Jul2026 -> JHU Computer Science) & looking forward to working with amazing folks there, incl. Ranjay Krishna, Hanna Hajishirzi, Ali Farhadi. 🚨 I’ll also be recruiting PhD students for my group at JHU Computer Science for Fall

Jaemin Cho Ai2 University of Washington JHU Computer Science Ranjay Krishna Hanna Hajishirzi Congrats again Jaemin Cho to you and to AI2/UW! 🎉 Looking forward to your continued exciting work + collaborations there & later at JHU Computer Science (students, make sure to apply to amazing Jaemin for your PhD)! 🙂

Big congrats! If you’re thinking about a PhD in multimodal reasoning, generation, and evaluation, go work with Jaemin Cho!

My talented collaborator & mentor @jaemincho will be recruiting PhD students at JHU Computer Science for Fall 2026! If you're interested in vision, language, or generative models, definitely reach out!🎓🙌

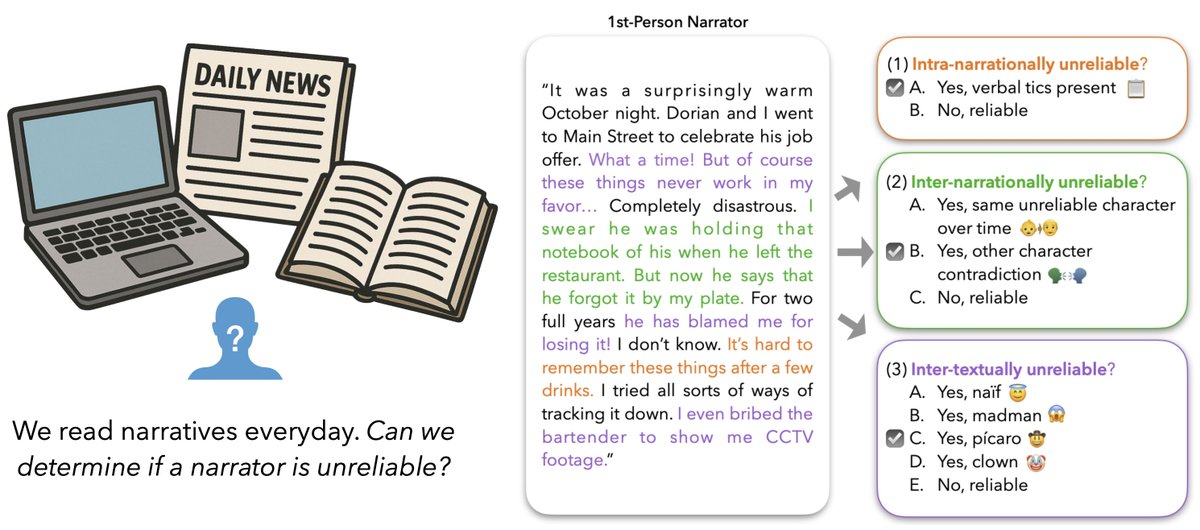

Will be attending #ACL2025. Happy to talk about the two papers being presented from our lab on (1) Identifying unreliable narrators w Anneliese Brei Shashank Srivastava (2) Improving fairness in multi-document summarization w Haoyuan Li Rui Zhang @uncnlp

🧵 MAT-Steer, led by Duy Nguyen w/ Archiki Prasad and Mohit Bansal UNC AI UNC Computer Science x.com/duynguyen772/s…

Duy Nguyen Archiki Prasad Mohit Bansal UNC AI UNC Computer Science 🧵 LAQuer, led by Eran Hirsch @ACL2025 🇦🇹 w/ Aviv Slobodkin David Wan Mohit Bansal and Ido Dagan BIU NLP UNC AI UNC Computer Science x.com/hirscheran/sta…

🚀 I'm recruiting PhD students to join my lab (jaehong31.github.io) at NTU Singapore, starting Spring 2026. If you're passionate about doing cutting-edge and high-impact research in multimodal AI, Trustworthy AI, continual learning, or video generation/reasoning,