Faisal Amir

@urmauur

UI Engineer - Making pretty things do useful stuff

ID: 256966153

http://www.urmauur.com 24-02-2011 12:49:32

14.14K Tweets

333 Followers

300 Following

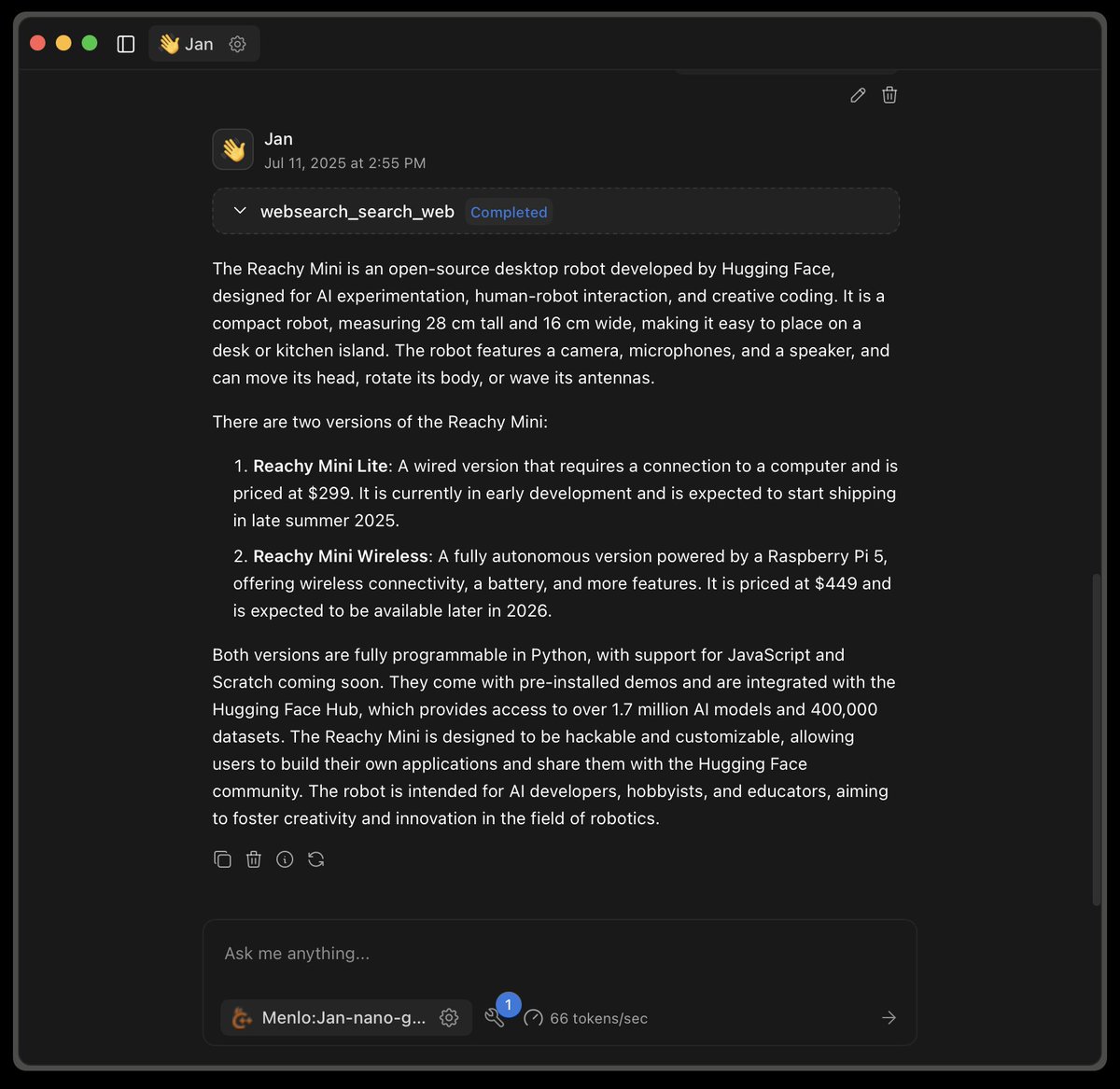

Open-source models can do Deep Research too. This video shows a full research report created by Jan-nano. To try it:

- Get Jan-nano from Jan Hub
- In settings, turn on MCP Servers and Serper API
- Paste your Serper API key

Your deep research assistant is ready.

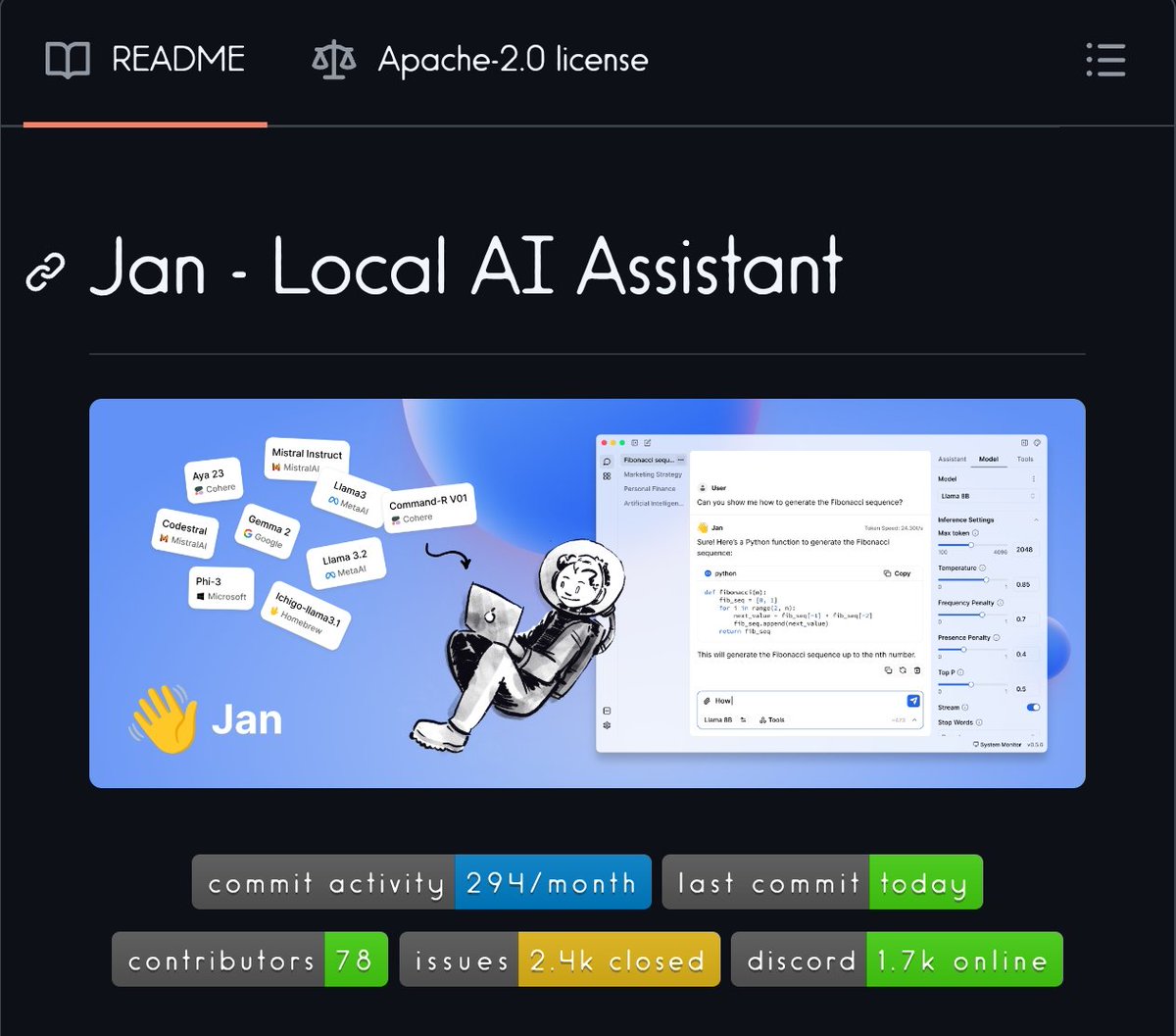

Jan v0.6.6 is out: Jan now runs fully on llama.cpp.

- Cortex is gone; local models now run on Georgi Gerganov's llama.cpp
- Toggle between llama.cpp builds
- Hugging Face added as a model provider
- Hub enhanced
- Images from MCPs render inline in chat

Update Jan or grab the latest.

Jan v0.6.8 is out: Jan is more stable now!

- Stability fixes for model loading
- Better llama.cpp: clearer errors and backend suggestions
- Faster MoE models with CPU offload
- Search private Hugging Face models
- Custom Jinja templates

Update your Jan or download the latest.