Valentina Pyatkin

@valentina__py

Postdoc at the Allen Institute for AI @allen_ai and @uwnlp | on the academic job market

ID: 786941656859811840

https://valentinapy.github.io · Joined 14-10-2016 14:48:06

636 Tweets

2.2K Followers

1.1K Following

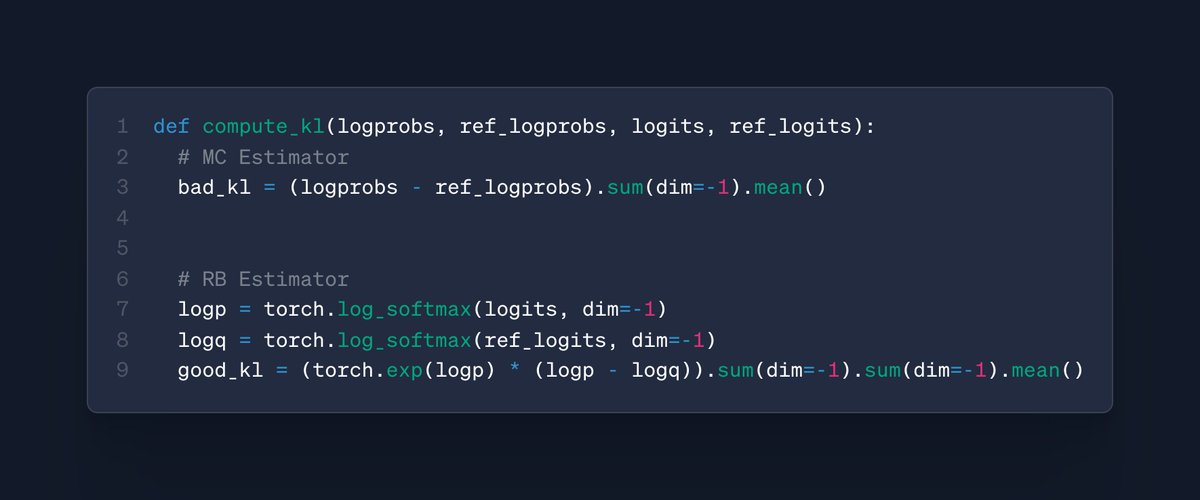

Current KL estimation practices in RLHF can yield high-variance and even negative estimates! We propose a provably better estimator that takes only a few lines of code to implement. 🧵👇 w/ Tim Vieira and Ryan Cotterell paper: arxiv.org/pdf/2504.10637 code: github.com/rycolab/kl-rb
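For context, a common way to estimate the KL penalty in RLHF is to sample a sequence y from the policy p and use log p(y) − log q(y), where q is the reference model. A single such estimate is unbiased but can be negative. The sketch below illustrates one per-token alternative, assumed here for illustration: it sums the exact next-token KL between p and q along the sampled prefix, which keeps the estimate unbiased and makes it nonnegative by construction. Everything in it is hypothetical (the two toy Markov-chain "models", `P_TABLE`, `Q_TABLE`, and all function names). It is not the authors' code, and we do not claim it matches the estimator or the variance guarantee in the linked paper. Sequences are short enough to enumerate, so the script computes exact means and variances instead of relying on sampling.

```python
import itertools
import math
import random

# Hypothetical toy setup: vocabulary of 3 tokens, sequences of length 4,
# small enough that all 3**4 = 81 sequences can be enumerated exactly.
VOCAB = [0, 1, 2]
T = 4
BOS = -1  # hypothetical "beginning of sequence" state

# Two toy autoregressive models standing in for a policy p and a reference q.
# For simplicity the next-token distribution depends only on the previous token.
P_TABLE = {
    BOS: [0.6, 0.3, 0.1],
    0: [0.5, 0.4, 0.1],
    1: [0.2, 0.2, 0.6],
    2: [0.3, 0.3, 0.4],
}
Q_TABLE = {
    BOS: [0.3, 0.4, 0.3],
    0: [0.4, 0.3, 0.3],
    1: [0.3, 0.3, 0.4],
    2: [0.2, 0.5, 0.3],
}


def next_dist(table, prefix):
    """Next-token distribution given a prefix (only the last token matters here)."""
    last = prefix[-1] if prefix else BOS
    return table[last]


def seq_logprob(table, seq):
    """Log-probability of a full sequence under a toy model (chain rule)."""
    return sum(math.log(next_dist(table, seq[:t])[seq[t]]) for t in range(len(seq)))


def step_kl(p, q):
    """Exact KL(p || q) between two categorical distributions; always >= 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def naive_estimate(seq):
    """Single-sample Monte Carlo estimate log p(y) - log q(y); can be negative."""
    return seq_logprob(P_TABLE, seq) - seq_logprob(Q_TABLE, seq)


def per_token_estimate(seq):
    """Sum of exact next-token KLs along the sampled prefixes; nonnegative."""
    return sum(
        step_kl(next_dist(P_TABLE, seq[:t]), next_dist(Q_TABLE, seq[:t]))
        for t in range(len(seq))
    )


def exact_moments(estimator):
    """Exact mean and variance of an estimator under y ~ p, by enumeration."""
    mean = second = 0.0
    for seq in itertools.product(VOCAB, repeat=T):
        w = math.exp(seq_logprob(P_TABLE, seq))
        v = estimator(seq)
        mean += w * v
        second += w * v * v
    return mean, second - mean * mean


def sample(table, rng):
    """Draw one sequence of length T from a toy model."""
    seq = ()
    for _ in range(T):
        seq += (rng.choices(VOCAB, weights=next_dist(table, seq))[0],)
    return seq


if __name__ == "__main__":
    naive_mean, naive_var = exact_moments(naive_estimate)
    tok_mean, tok_var = exact_moments(per_token_estimate)
    print(f"naive     mean / var: {naive_mean:.4f} / {naive_var:.4f}")
    print(f"per-token mean / var: {tok_mean:.4f} / {tok_var:.4f}")

    rng = random.Random(0)
    samples = [sample(P_TABLE, rng) for _ in range(8)]
    print("naive    :", [round(naive_estimate(s), 3) for s in samples])
    print("per-token:", [round(per_token_estimate(s), 3) for s in samples])
```

Both printed means equal the exact sequence-level KL for this toy pair. Individual naive samples can drop below zero while the per-token ones cannot. The variance figures describe only this particular pair of toy models and are printed for comparison, not as a general guarantee.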

Lucas Beyer (bl16), (((ل()(ل() 'yoav))))👾, rohan anil

arxiv.org/abs/2410.15002 This is in the text-to-image domain, and we have some ideas on how to extend it to the text domain. We also recently published arxiv.org/abs/2504.12459, which connects the number of entity co-occurrences with "linearity" in model representations.