Valery Bugakov

@valerybugakov

🏄‍♂️🏂🏀 Software engineer at @sourcegraph

ID: 404265797

03-11-2011 17:37:46

504 Tweets

202 Followers

2.2K Following

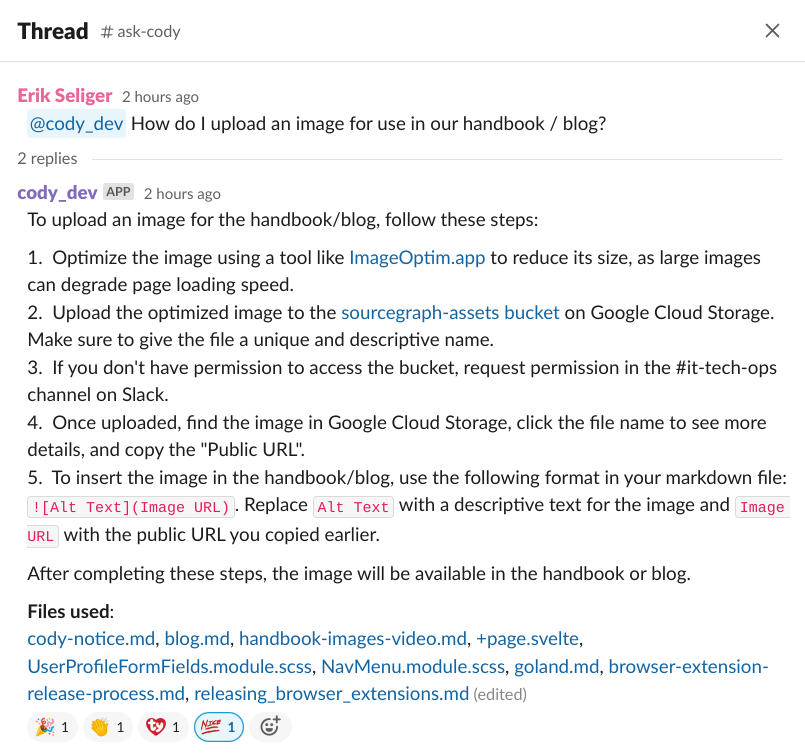

We* hacked together a version of Cody that runs as a Slackbot and answers questions about your company's docs. So far, it's proven quite handy. Would others like to try it out? *By "we" I mean Valery Bugakov; you can see his work here: sourcegraph.com/github.com/sou…

Singapore dinner with swyx, @TheNamanKumar, Farhan Attamimi, Valery Bugakov, Tim Lucas, Erik Seliger, Hitesh Sagtani, and more cool people!

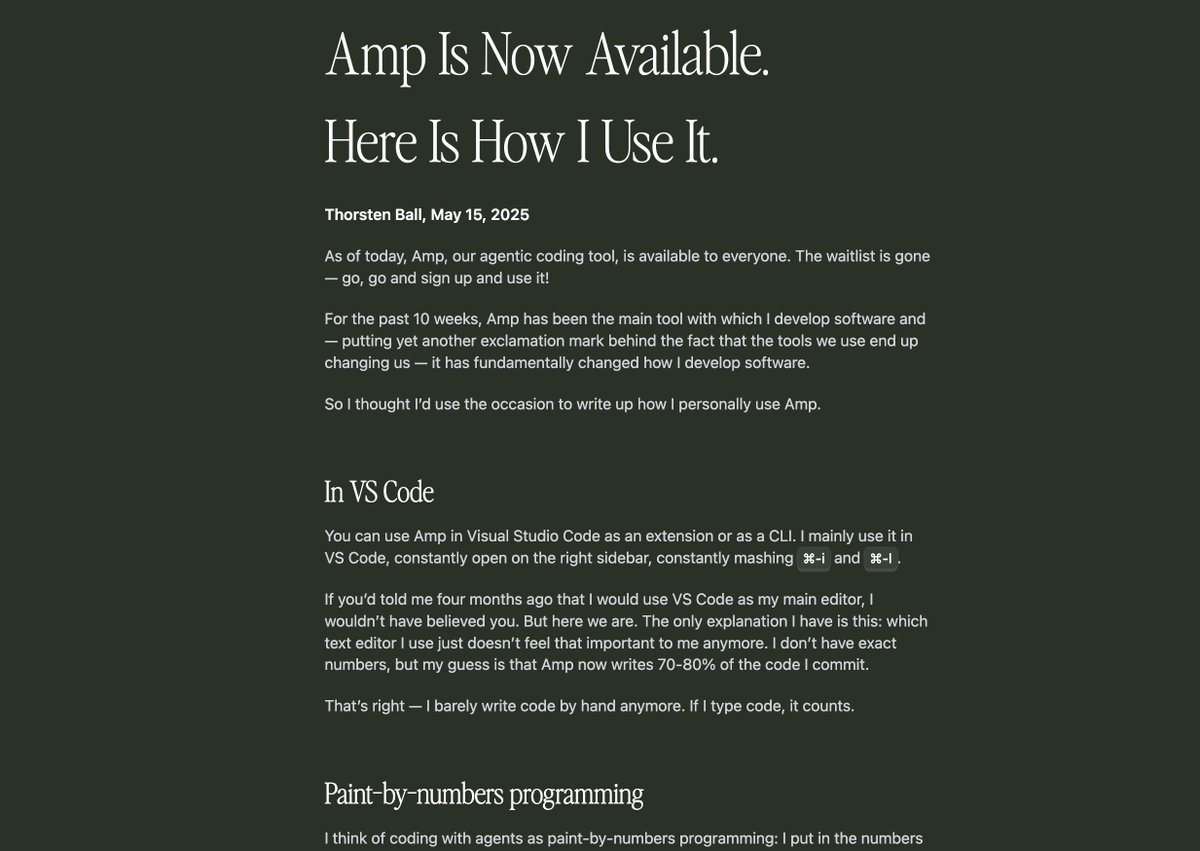

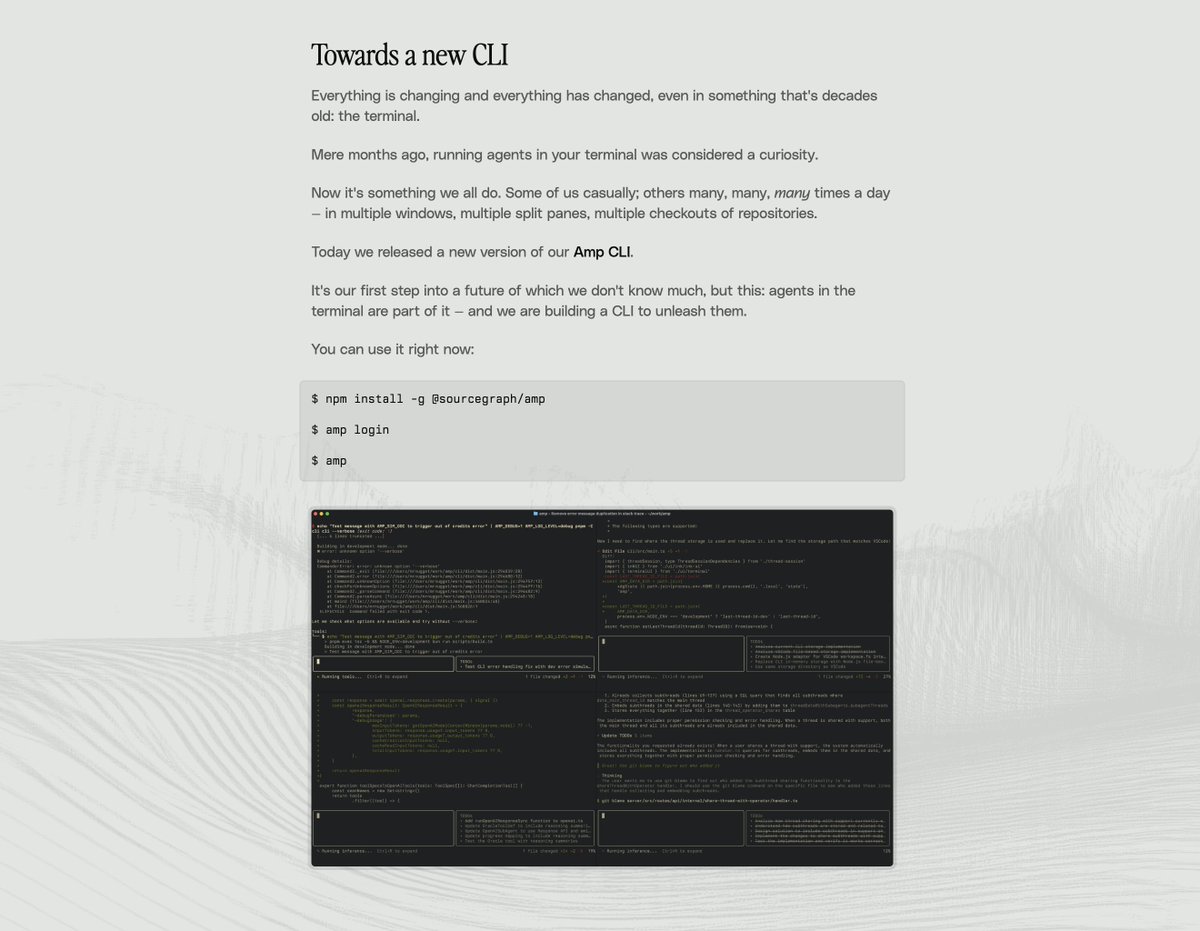

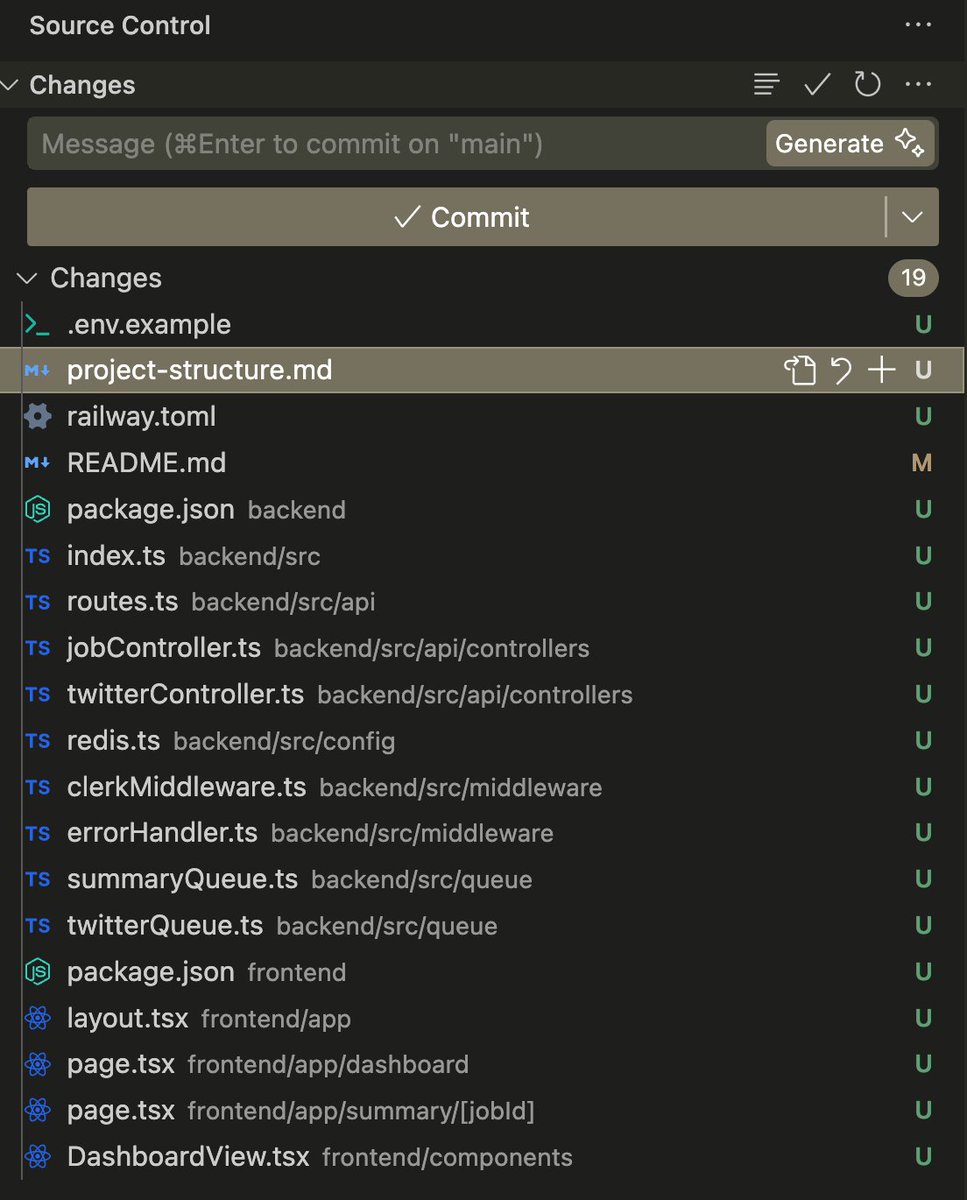

trying out Claude 4 Sonnet + Quinn Slack's new Amp — Research Preview and... i think i just felt the agi. this was the result of "turn my scripts into a multitenant @railway app with billing" 🤯

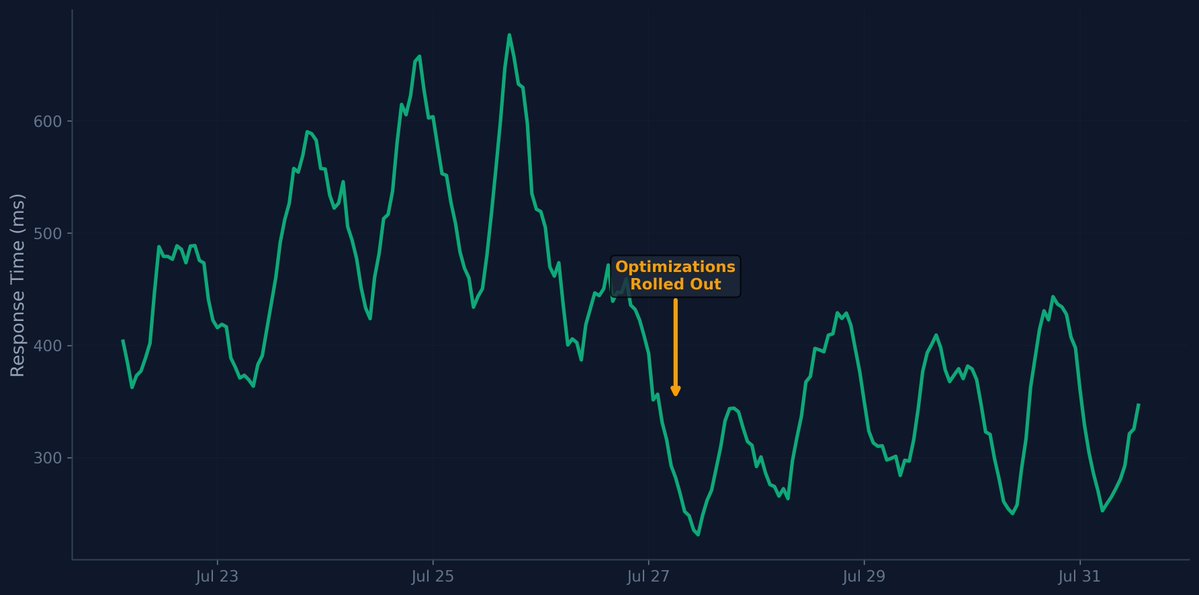

Heeeere we go! Man, I've been waiting for this. Valery Bugakov, Tom, Hitesh Sagtani, and the team have done a fantastic job: it's super fast, the custom model is better, and the contextual "understanding" is better.

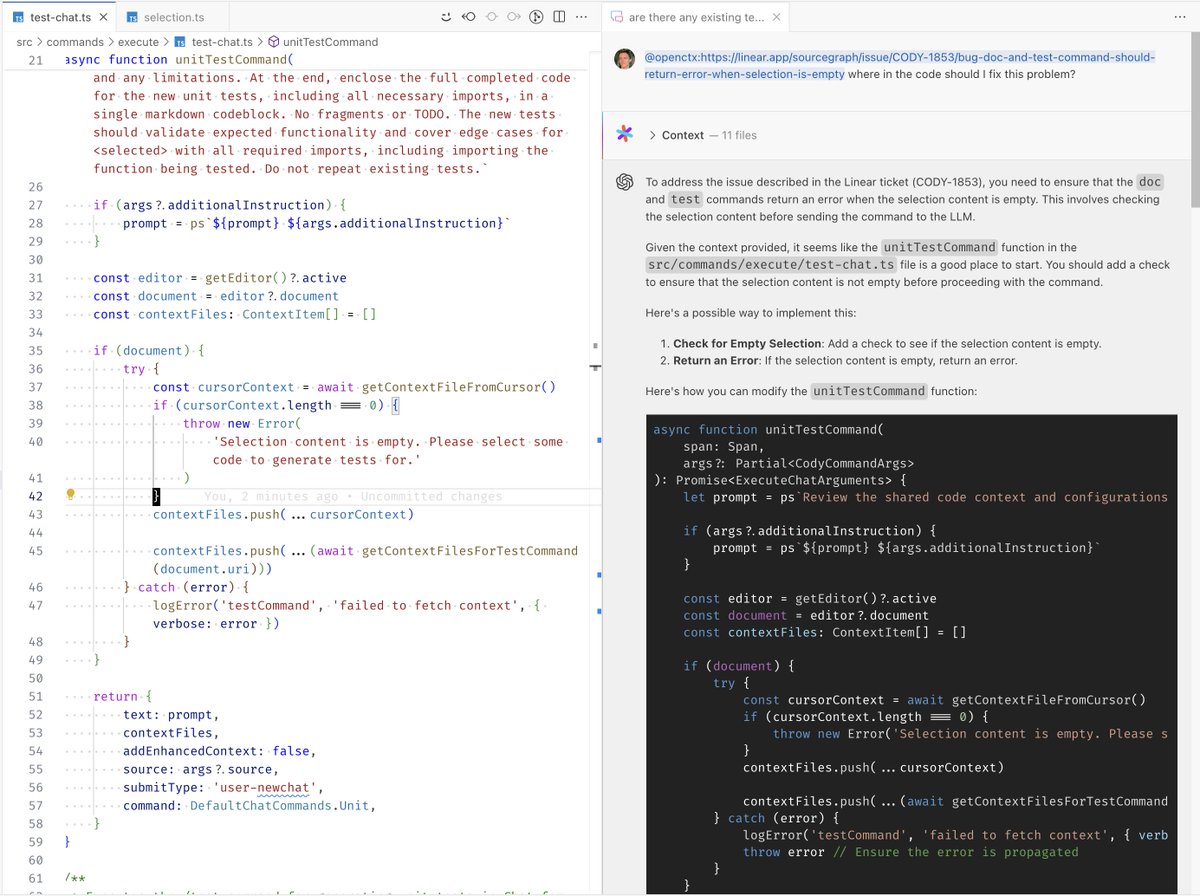

While Amp — Research Preview is great for large, agentic coding sessions, sometimes you need to make surgical edits to the code yourself. We’ve enabled more fine-grained suggestions in Amp Tab, so you can now Tab through a refactor and review each change carefully.

New Amp — Research Preview feature update: Amp Tab now works in Jupyter Notebooks!