Vishruth Veerendranath

@viishruth

ML/NLP Master’s @LTIatCMU

ID: 3274985820

https://vishruth-v.github.io/ 11-07-2015 01:33:20

125 Tweets

223 Followers

803 Following

As we prepare for EMNLP 2024, we're thrilled to congratulate all of the LTI researchers with accepted papers at this year's conference. In all, 32 papers with LTI authors were accepted! Read about them all here: lti.cs.cmu.edu/news-and-event…

Sad to miss #EMNLP2024 but do check out our paper "ECCO: Can We Improve Model-Generated Code Efficiency Without Sacrificing Functional Correctness?" presented by Vishruth Veerendranath and Siddhant Tuesday 11-12:30 at Poster Session 02‼️

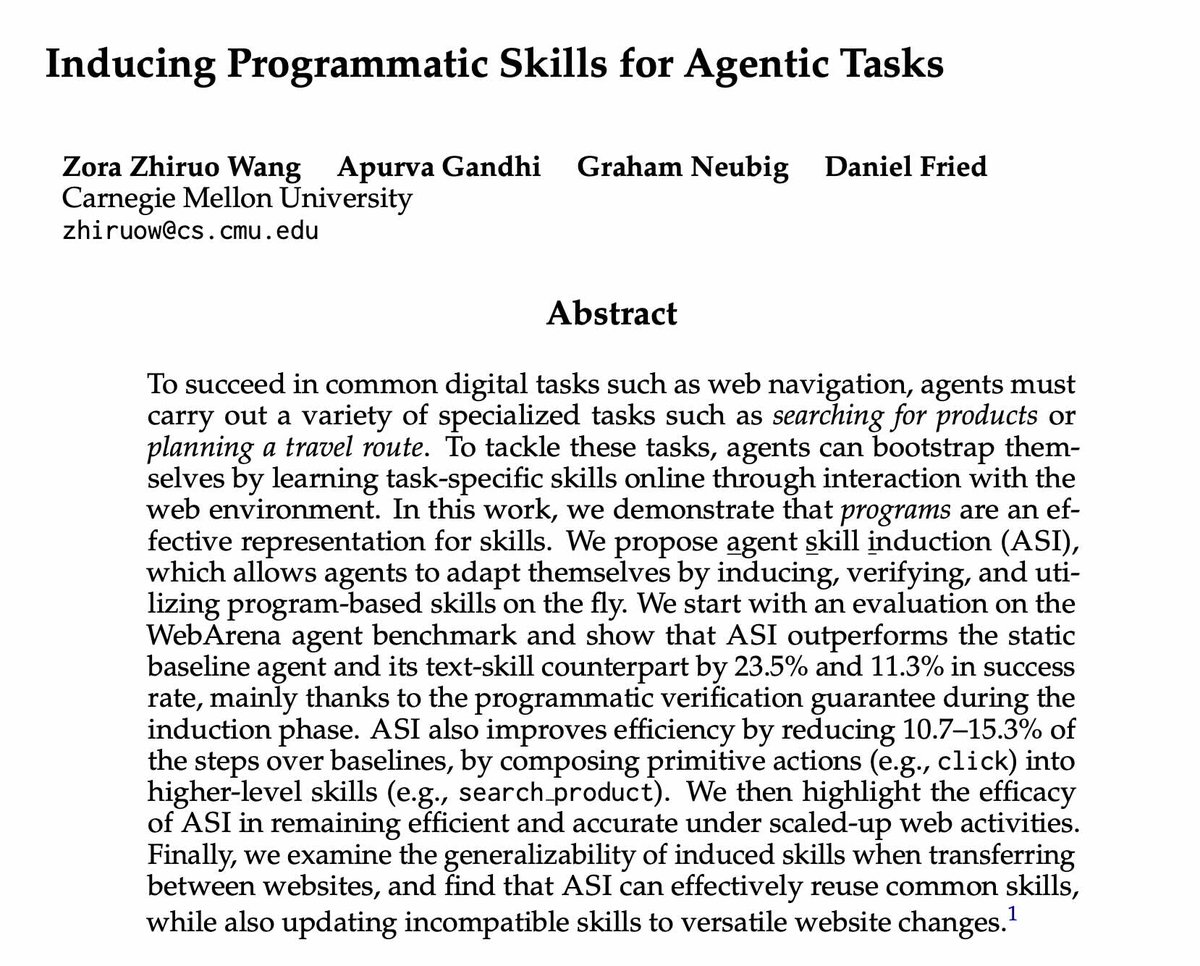

ECCO: Can We Improve Model-Generated Code Efficiency Without Sacrificing Functional Correctness? by Siddhant Waghjale, Vishruth Veerendranath, Zora Wang, and Daniel Fried Session: NLP Applications 1, Session 02, 11:00-12:30 arxiv.org/abs/2407.14044

🧵We’ve spent the last few months at DatologyAI building a state-of-the-art data curation pipeline and I’m SO excited to share our first results: we curated image-text pretraining data and massively improved CLIP model quality, training speed, and inference efficiency 🔥🔥🔥

Tired: Bringing up politics at Thanksgiving Wired: Bringing up DatologyAI’s new text curation results at Thanksgiving That’s right, we applied our data curation pipeline to text pretraining data and the results are hot enough to roast a 🦃 🧵

🚀 I am thrilled to introduce SnowflakeDB's Arctic Embed 2.0 embedding models! 2.0 offers high-quality multilingual performance with all the greatness of our prior embedding models (MRL, Apache-2 license, great English retrieval, inference efficiency) snowflake.com/engineering-bl…🌍

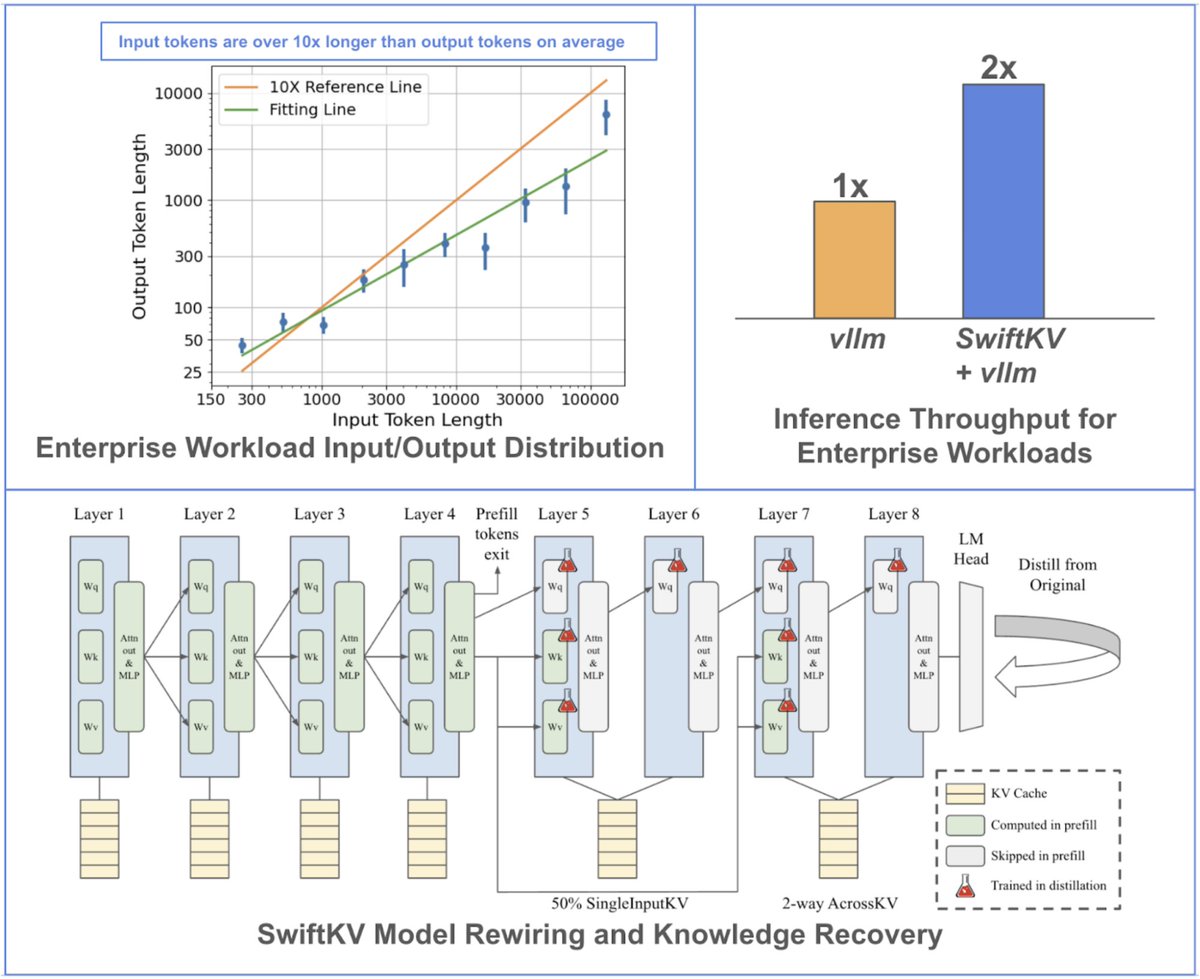

We are excited to share SwiftKV, our recent work at SnowflakeDB AI Research! SwiftKV reduces the pre-fill compute for enterprise LLM inference by up to 2x, resulting in higher serving throughput for input-heavy workloads. 🧵

Cannot attend #ICLR2025 in person (will be at NAACL and Stanford soon!), but do check out 👇 ▪️Apr 27: "Exploring the Pre-conditions for Memory-Learning Agents" led by Vishruth Veerendranath and Vishwa Shah, at SSI-FM workshop ▪️Apr 28: our Deep Learning For Code @ NeurIPS'25 workshop with a fantastic line of works &