Vincent Weisser

@vincentweisser

ceo @primeintellect / decentralized agi & science

ID: 427766081

http://vincentweisser.com

03-12-2011 23:18:48

9,9K Tweet

19,19K Followers

2,2K Following

we're hiring for our engineering team at Prime Intellect right now. primarily looking for builders in rust, python, typescript and more

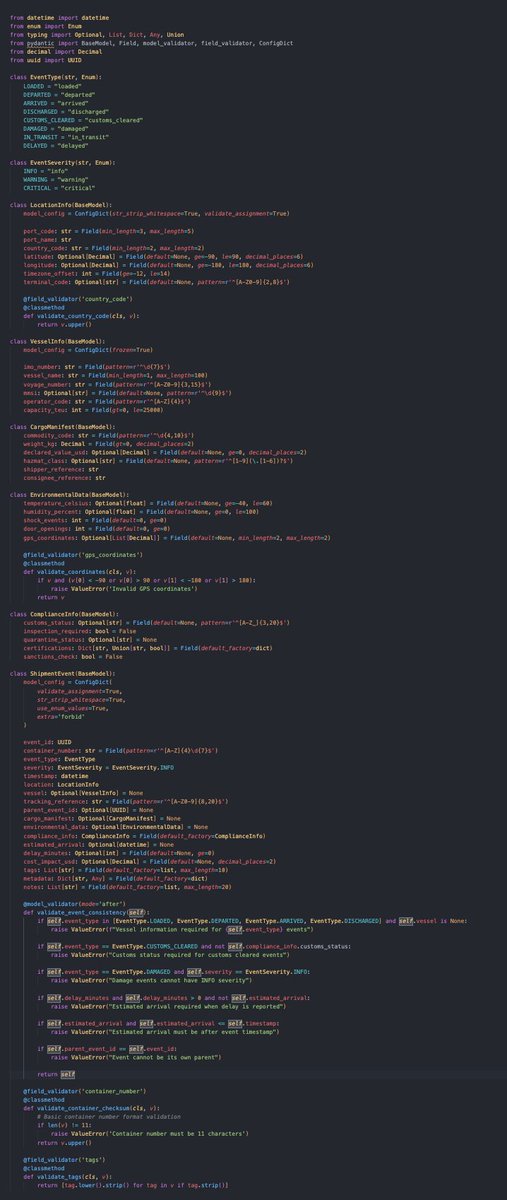

Awesome work by Mario Sieg to accelerate quantization of pseudo-gradients in decentralized training settings like DiLoCo - already integrated in pccl (prime collective communication library)

will brown cooking up some amazing async RL things! dev branch reveals async off-policy steps, which can easily give you a 2-3x speedup because of higher GPU utilization 👀