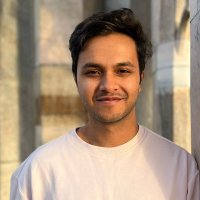

Valentin Hofmann

@vjhofmann

Postdoc @allen_ai @uwnlp | Formerly @UniofOxford @CisLMU @stanfordnlp @GoogleDeepMind

ID: 1227633169622556672

https://valentinhofmann.github.io/ 12-02-2020 16:38:56

234 Tweets

1.1K Followers

243 Following

Delighted there will finally be a workshop devoted to tokenization - a critical topic for LLMs and beyond! 🎉 Join us for the inaugural edition of TokShop at #ICML2025 in Vancouver this summer! 🤗

Do LLMs learn language via rules or analogies? This could be a surprise to many – models rely heavily on stored examples and draw analogies when dealing with unfamiliar words, much as humans do. Check out this new study led by Valentin Hofmann to learn how they made the discovery 💡

Excited to see our study on linguistic generalization in LLMs featured by University of Oxford News!