Vincent Abbott

@vtabbott_

Maker of *those* diagrams for deep learning algorithms | 🇦🇺 in 🇬🇧

ID: 1549633222689992706

http://www.vtabbott.io 20-07-2022 05:53:00

463 Tweets

6.6K Followers

307 Following

If you’re interested in GPU programming or 3b1b-style Manim videos, can confirm SzymonOzog is cracked

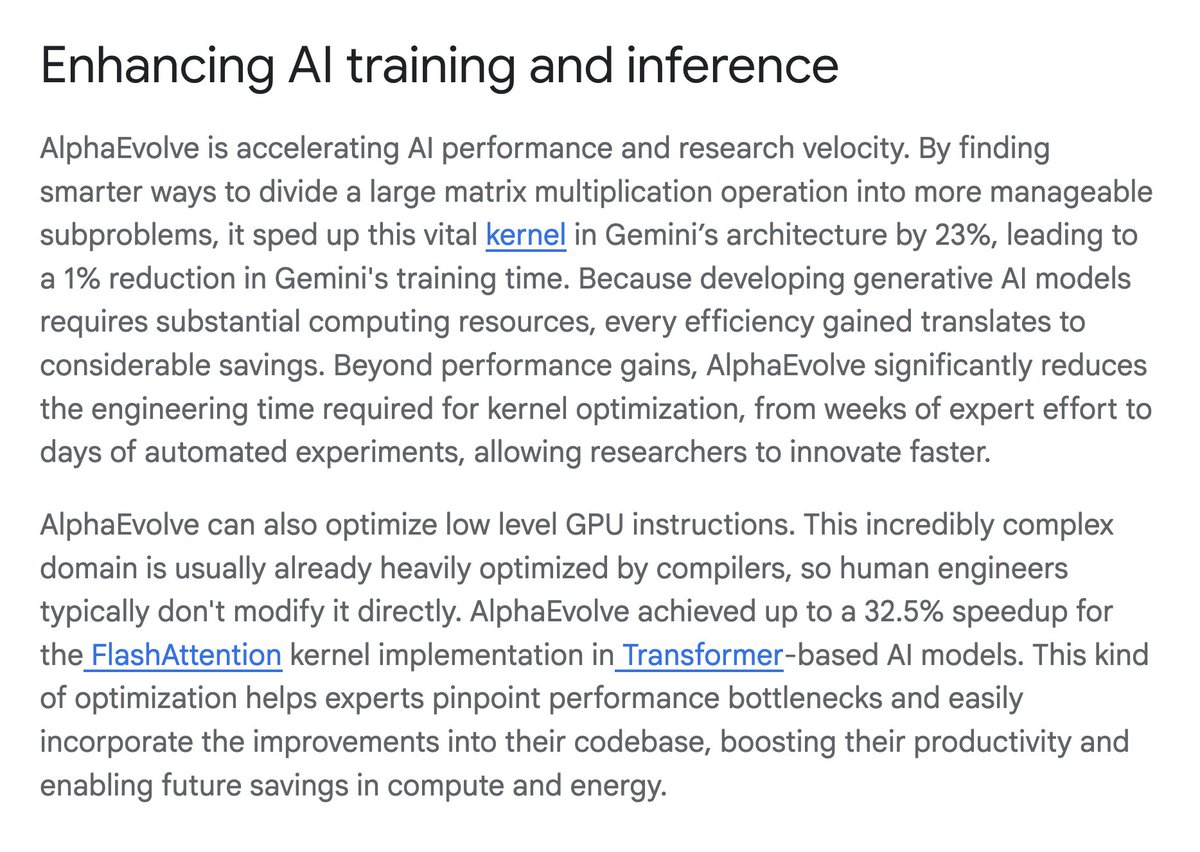

Recently posted w/ Gioele Zardini and SyntheticGestalt JP. Diagrams indicate that exponents are attention’s bottleneck. We use the fusion theorems to show that any normalizer works for fusion, replace SoftMax with L2, and implement it thanks to Gerard Glowacki! Even w/o warp shuffling TC
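A minimal pure-Python sketch of why an L2 normalizer fuses easily, assuming "replace SoftMax with L2" means dividing the raw scores q·k_j by their L2 norm instead of exponentiating them and dividing by their sum (an assumption; the post does not spell out the exact formulation, scaling, or masking). The sum of squared scores is additive across key/value blocks, so a tiled pass only keeps a running numerator and a running sum of squares, then divides once at the end, with no exponentials and no max-rescaling. The function names are hypothetical and not taken from the authors' implementation.

```python
import math


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def l2_attention_reference(q, keys, values):
    """Unfused reference (assumed formulation): materialize every score,
    then divide the score-weighted sum of values by the L2 norm of the scores."""
    scores = [dot(q, k) for k in keys]
    norm = math.sqrt(sum(s * s for s in scores))
    d_v = len(values[0])
    return [sum(s * v[i] for s, v in zip(scores, values)) / norm for i in range(d_v)]


def l2_attention_streamed(q, keys, values, block_size=2):
    """Fused-style sketch: visit key/value blocks one at a time, keeping only
    a running numerator and a running sum of squared scores."""
    d_v = len(values[0])
    numerator = [0.0] * d_v
    sum_sq = 0.0
    for start in range(0, len(keys), block_size):
        block_k = keys[start:start + block_size]
        block_v = values[start:start + block_size]
        for k, v in zip(block_k, block_v):
            s = dot(q, k)
            sum_sq += s * s  # additive across blocks, so no rescaling is needed
            for i in range(d_v):
                numerator[i] += s * v[i]
    # Single normalization at the end (undefined if every score is zero).
    norm = math.sqrt(sum_sq)
    return [x / norm for x in numerator]


if __name__ == "__main__":
    q = [1.0, 0.0]
    keys = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]]
    values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(l2_attention_reference(q, keys, values))
    print(l2_attention_streamed(q, keys, values, block_size=2))
```

Both functions return the same vector for any block size; the contrast with SoftMax is that an online SoftMax must track a running maximum and rescale its partial sums with exponentials whenever that maximum changes.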

SzymonOzog I'll be refactoring the code to allow for texture packs at some point. This is actually a good resource for style choices. The wires are drawn between anchors (shown below), so it should be straightforward to just change the "drawCurves" function.
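A hypothetical sketch of why swapping the wire style can stay a local change: if each wire is defined only by its two anchor points, the curve-drawing step can be a pure function from anchors to a polyline, and a "texture pack" could pass in a different function. The names `draw_curves`, `bezier_wire`, and `straight_wire`, and the horizontal-tangent control-point rule, are assumptions for illustration, not the actual `drawCurves` code.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float]
WireStyle = Callable[[Point, Point], List[Point]]


def bezier_wire(start: Point, end: Point, samples: int = 16) -> List[Point]:
    """Sample a cubic Bezier whose control points are offset horizontally
    from each anchor, so the wire leaves and enters its anchors horizontally."""
    (x0, y0), (x3, y3) = start, end
    dx = (x3 - x0) / 2
    x1, y1 = x0 + dx, y0  # first control point, level with the start anchor
    x2, y2 = x3 - dx, y3  # second control point, level with the end anchor
    points = []
    for i in range(samples + 1):
        t = i / samples
        u = 1 - t
        x = u**3 * x0 + 3 * u**2 * t * x1 + 3 * u * t**2 * x2 + t**3 * x3
        y = u**3 * y0 + 3 * u**2 * t * y1 + 3 * u * t**2 * y2 + t**3 * y3
        points.append((x, y))
    return points


def straight_wire(start: Point, end: Point) -> List[Point]:
    """Alternative style: a straight segment between the two anchors."""
    return [start, end]


def draw_curves(anchor_pairs: List[Tuple[Point, Point]],
                style: WireStyle = bezier_wire) -> List[List[Point]]:
    """Turn each (start, end) anchor pair into a polyline using the chosen style."""
    return [style(start, end) for start, end in anchor_pairs]


if __name__ == "__main__":
    pairs = [((0.0, 0.0), (4.0, 2.0))]
    print(draw_curves(pairs)[0][8])                       # midpoint of the curved wire
    print(draw_curves(pairs, style=straight_wire)[0])     # same anchors, straight style
```

Because the anchors are computed separately from the drawing, changing the look of every wire only means passing a different `style` function.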