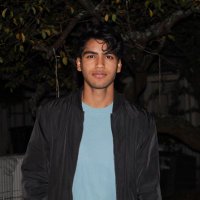

James

@wattersjames

“There are some things which cannot be learned quickly, and time, which is all we have, must be paid heavily for their acquiring.” —Hemingway

ID: 36093693

28-04-2009 15:29:42

Mark O'Neill: Yep! Platform teams are definitely starting to trend. I see this in my own work, though my view is limited compared to yours! Stream-aligned teams were organized to enable fast production, but now there's inefficiency from duplicated effort. How do we resolve this? Platform teams!