Sean Welleck

@wellecks

Assistant Professor at CMU. Marathoner, @thesisreview.

ID: 280403336

http://wellecks.com 11-04-2011 07:59:23

1.1K Tweets

6.6K Followers

225 Following

‘Bold,’ ‘positive’ and ‘unparalleled’: Allen School Ph.D. graduates Ashish Sharma and Sewon Min recognized with ACM Doctoral Dissertation Awards news.cs.washington.edu/2025/06/04/all… Massive congrats to Ashish Sharma and Sewon Min - huge win for UW NLP and the broader NLP community! 🙌

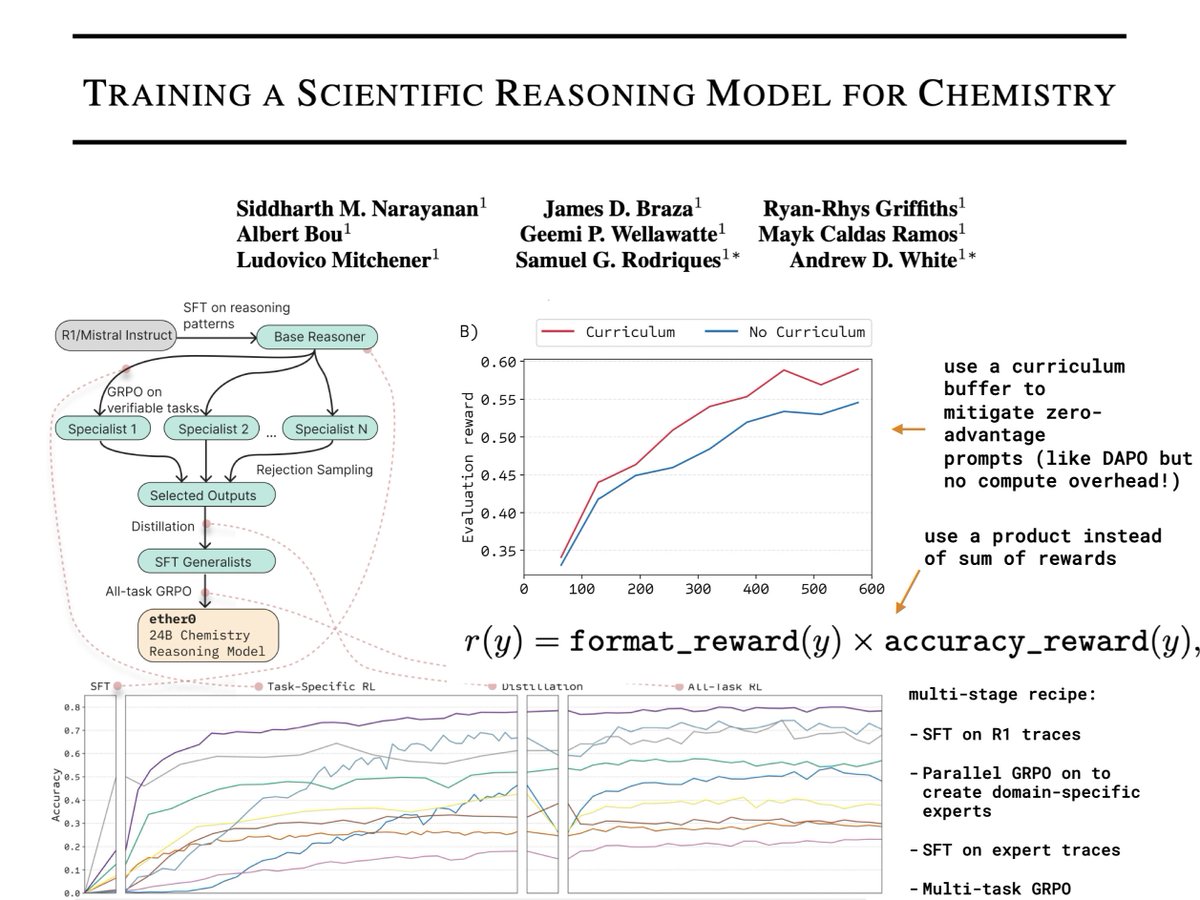

There's lots of RL goodies in the tech report behind FutureHouse's new reasoning model for chemistry 👀 Three things stood out to me: 1. Training domain-specific experts in parallel, before distilling into a generalist model. The clever thing here is that you can parallelise

In the test-time scaling era, we all would love a higher-throughput serving engine! Introducing Tokasaurus, an LLM inference engine for high-throughput workloads with large and small models! Led by Jordan Juravsky, in collaboration with hazyresearch and an amazing team!

🚨 Deadline for SCALR 2025 Workshop: Test‑time Scaling & Reasoning Models at COLM '25 Conference on Language Modeling is approaching!🚨 scalr-workshop.github.io 🧩 Call for short papers (4 pages, non‑archival) now open on OpenReview! Submit by June 23, 2025; notifications out July 24. Topics

fly51fly (@fly51fly): [LG] Rewarding the Unlikely: Lifting GRPO Beyond Distribution Sharpening

A He, D Fried, S Welleck [CMU] (2025)

arxiv.org/abs/2506.02355

![fly51fly (@fly51fly) on Twitter photo](https://pbs.twimg.com/media/Gs4BCWuakAAJJeq.jpg)