Xavier Gonzalez

@xavierjgonzalez

PhD candidate in AI and Machine Learning at @Stanford. Advised by @scott_linderman. Parallelizing nonlinear RNNs. All views my own.

ID: 1353428973808566272

https://www.linkedin.com/in/xavier-gonzalez-517b5262/ 24-01-2021 19:49:52

75 Tweets

282 Followers

620 Following

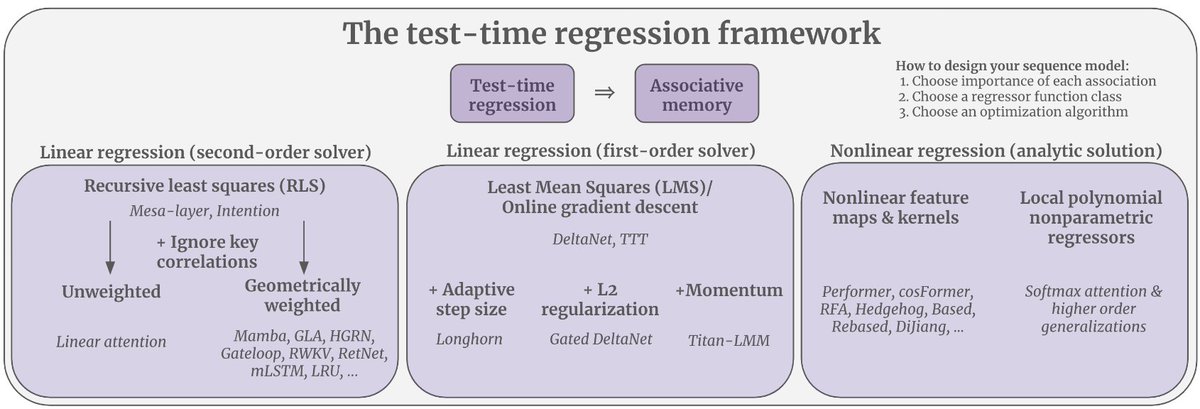

tomorrow at 10:30 pst/1:30 est i’ll be talking at the first ASAP seminar, organized by Songlin Yang, Simran Arora, Xinyu Yang, and Han Guo! i’ll present recent work on a unifying framework for current sequence models like mamba, attention, etc. it’s all online so come thru!

This is an amazing class and a great way to learn cutting-edge generative models like diffusion. It also comes with a beautiful set of course notes: diffusion.csail.mit.edu/docs/lecture-n… Tons of thanks to Peter Holderrieth for creating this super helpful resource!

Xavier Gonzalez NeurIPS Conference I second this call🙌

Xavier Gonzalez NeurIPS Conference Second this. No point in separate deadlines. They do more damage than good.

Xavier Gonzalez NeurIPS Conference True this. Honestly, what is there to gain from having two deadlines a week apart? No reviewer action happens during that week, and we already know we'll be facing the heaviest submission load, where most reviewers are likely to max out. So having two separate PDFs (or worse, a