Xianjun Yang

@xianjun_agi

RS @AIatMeta. GenAI safety, data-centric AI. Previously PhD @ucsbnlp, BEng @tsinghua_uni. Opinions are my own.

All Watched Over by Machines of Loving Grace.

ID: 1224810594882113536

https://xianjun-yang.github.io/ 04-02-2020 21:43:09

335 Tweets

886 Followers

1.1K Following

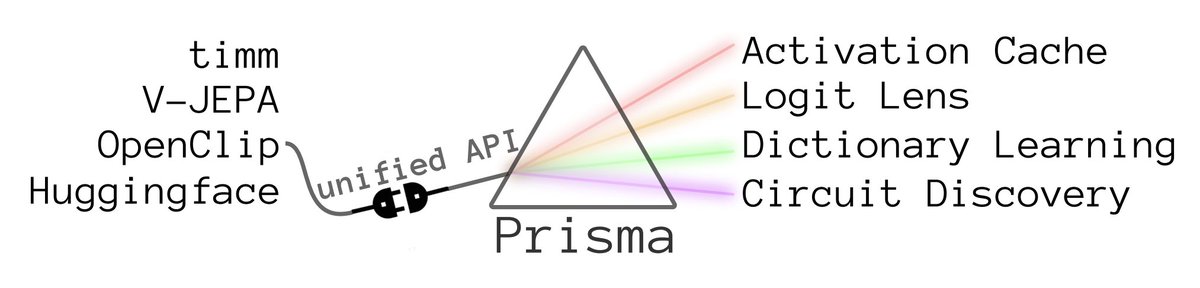

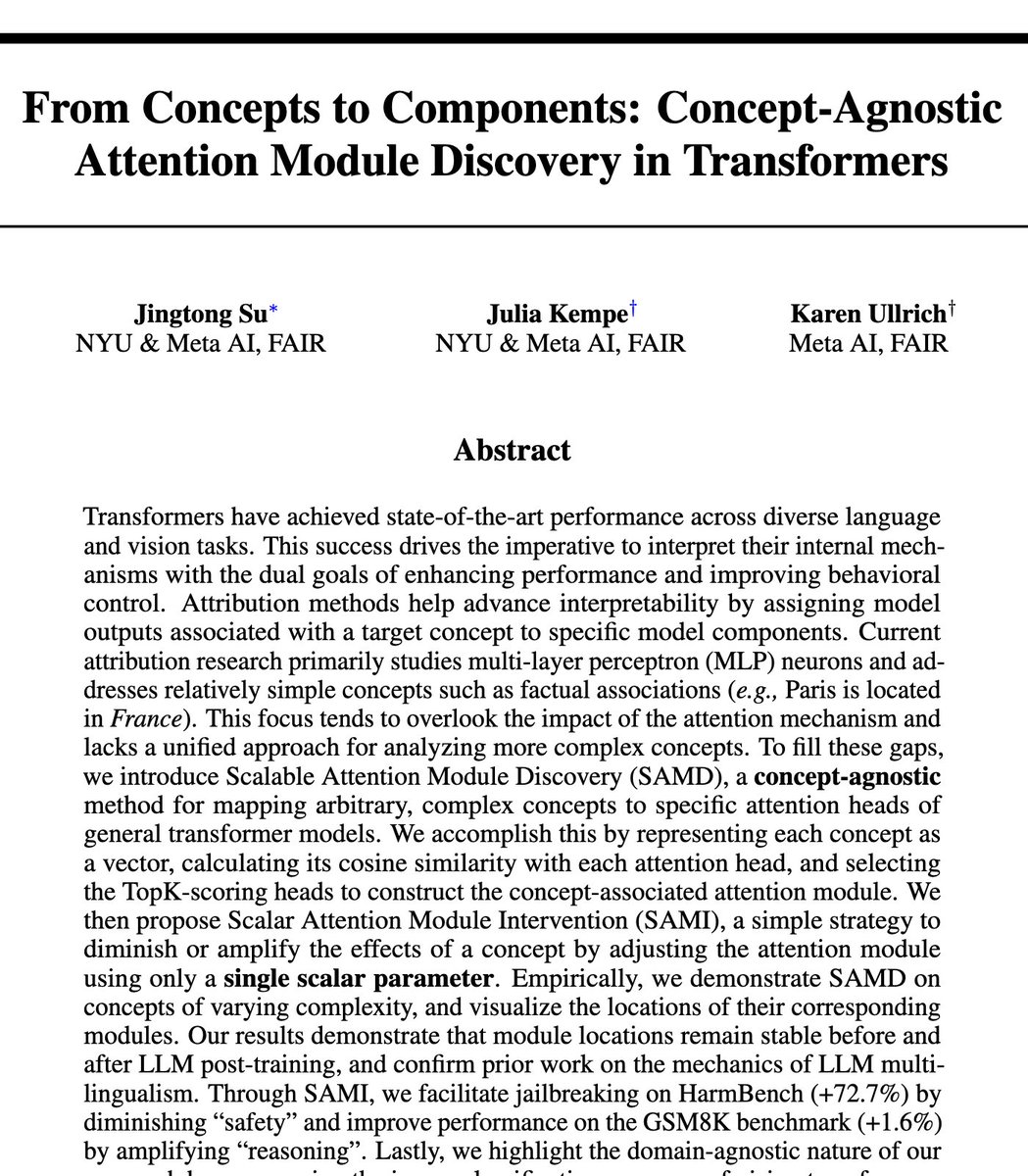

Our paper "Prisma: An Open Source Toolkit for Mechanistic Interpretability in Vision and Video" received an Oral at the Mechanistic Interpretability for Vision Workshop at CVPR 2025! 🎉 We'll be in Nashville next week. Come say hi 👋 #CVPR2025