Tim Vieira

@xtimv

machine learning, reinforcement learning, programming languages, handstands (he/him)

ID: 47864778

http://timvieira.github.io/blog 17-06-2009 05:15:39

2.2K Tweets

3.3K Followers

999 Following

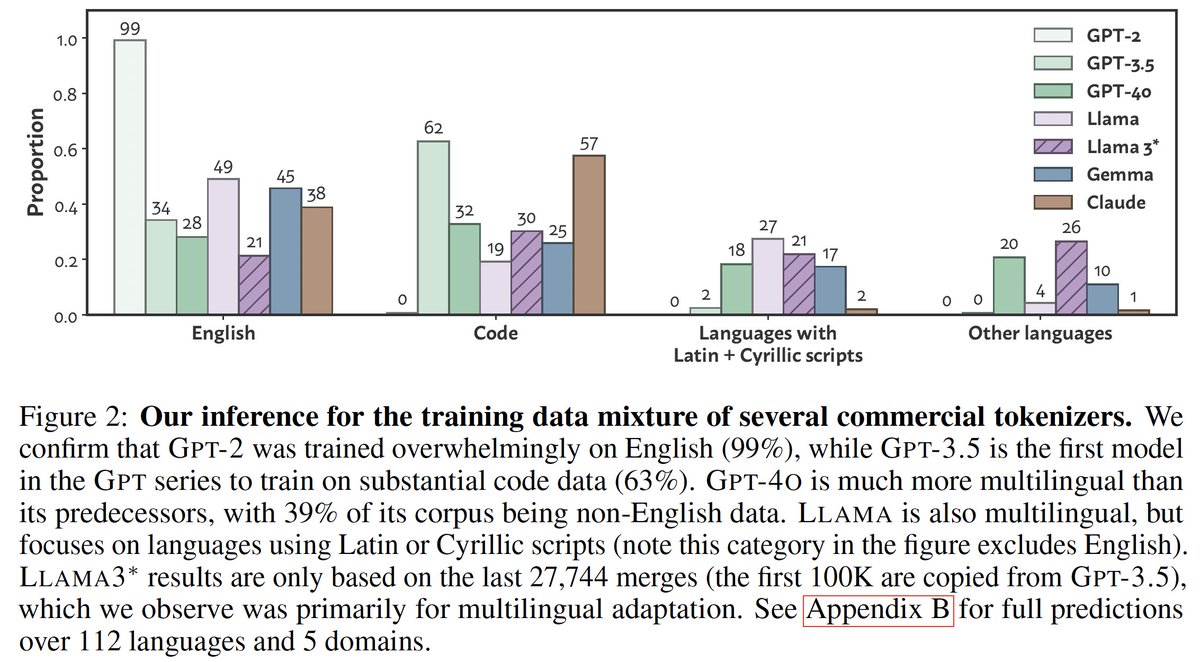

What do BPE tokenizers reveal about their training data?🧐 We develop an attack🗡️ that uncovers the training data mixtures📊 of commercial LLM tokenizers (incl. GPT-4o), using their ordered merge lists! Co-1⃣st Jonathan Hayase arxiv.org/abs/2407.16607 🧵⬇️

Super excited to announce that Microsoft Research's FATE group, Sociotechnical Alignment Center, and friends have several workshop papers at next week's NeurIPS Conference. A short thread about (some of) these papers below... #NeurIPS2024

Tim Vieira and I will be presenting this work at NeurIPS today! Join us from 16:40h at East Exhibition Hall A!

Super excited for the Evaluating Evaluations workshop at NeurIPS Conference today!!! evaleval.github.io #NeurIPS2024 Microsoft Research's FATE group, Sociotechnical Alignment Center, and friends will be presenting several papers there. See below for details...

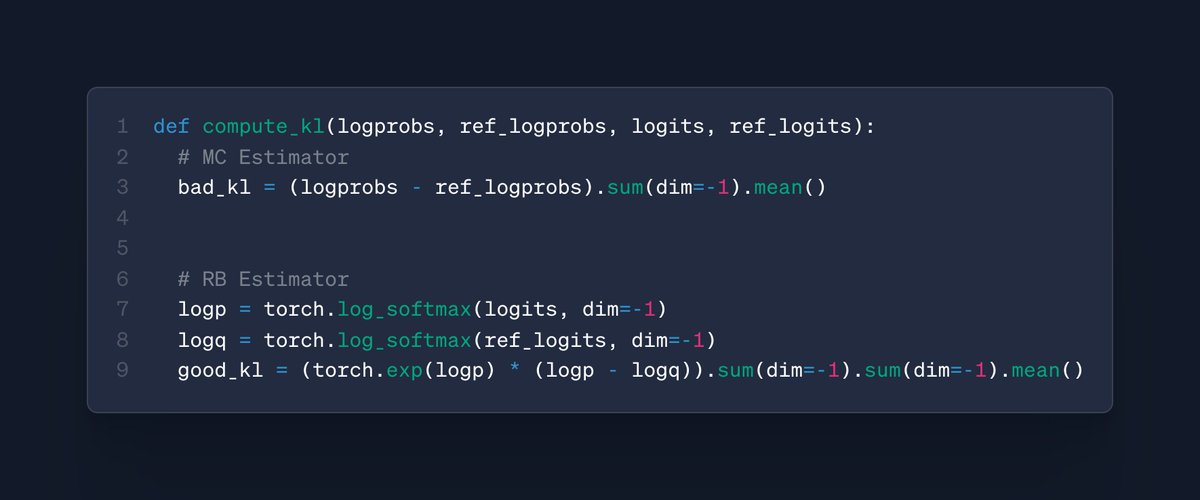

Tim Vieira and I were just discussing this interesting comment in the DeepSeek paper introducing GRPO: a different way of setting up the KL loss. It's a little hard to reason about what this does to the objective. 1/

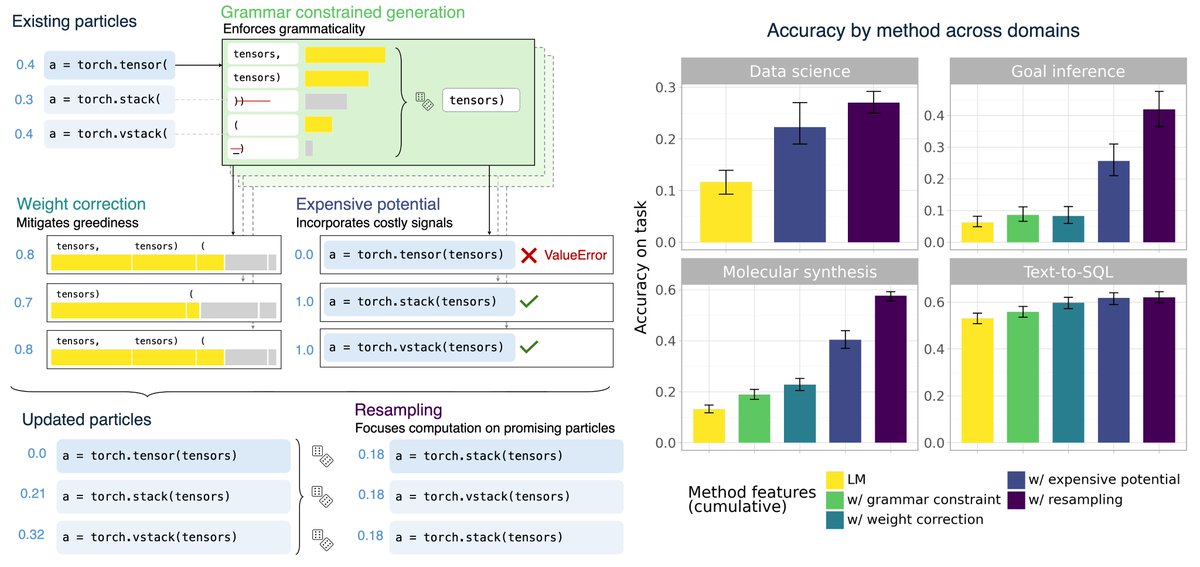

Excited to rep the team behind “Syntactic and Semantic Control of LLMs via Sequential Monte Carlo” at ICLR 2025 #ICLR2025!🎲🎛️ Stop by our poster #634 from 10:00am-12:30pm today to chat with co-authors João Loula, Ben LeBrun, Alex Lew, Tim Vieira, Ryan Cotterell & more!

Current KL estimation practices in RLHF can generate high variance and even negative values! We propose a provably better estimator that only takes a few lines of code to implement.🧵👇 w/ Tim Vieira and Ryan Cotterell paper: arxiv.org/pdf/2504.10637 code: github.com/rycolab/kl-rb
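
To make the claim about negative values and high variance concrete, here is a minimal sketch. It is not taken from the linked paper or repository. It assumes two hypothetical toy models, `P` and `Q`, whose next-token distributions depend only on the previous token, and compares two unbiased single-sample estimates of KL(P || Q): the sampled log-ratio, and a sum of exact per-step KL terms along the same sampled sequence. All names and numbers are illustrative.

```python
import math
import random
import statistics

# Hypothetical toy models: next-token distributions over a 3-token vocabulary,
# conditioned only on the previous token (None marks the start of a sequence).
P = {None: [0.6, 0.3, 0.1], 0: [0.5, 0.4, 0.1], 1: [0.2, 0.5, 0.3], 2: [0.1, 0.3, 0.6]}
Q = {None: [0.4, 0.4, 0.2], 0: [0.3, 0.4, 0.3], 1: [0.3, 0.3, 0.4], 2: [0.2, 0.2, 0.6]}
LENGTH = 4  # tokens per sampled sequence (arbitrary choice)


def step_kl(p, q):
    """Exact KL(p || q) between two next-token distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def one_sample(rng):
    """Sample one sequence from P and return both estimates for it."""
    prev, log_ratio, exact_steps = None, 0.0, 0.0
    for _ in range(LENGTH):
        p, q = P[prev], Q[prev]
        # Exact KL of the next-token distributions in this context (never negative).
        exact_steps += step_kl(p, q)
        tok = rng.choices(range(len(p)), weights=p)[0]
        # Log-ratio of the sampled token only (can be negative).
        log_ratio += math.log(p[tok] / q[tok])
        prev = tok
    return log_ratio, exact_steps


def compare(n=20000, seed=0):
    """Summarize both estimators over n sampled sequences."""
    rng = random.Random(seed)
    samples = [one_sample(rng) for _ in range(n)]
    summary = {}
    for name, values in zip(("log_ratio", "exact_steps"), zip(*samples)):
        summary[name] = {
            "mean": statistics.mean(values),
            "variance": statistics.pvariance(values),
            "min": min(values),
        }
    return summary


if __name__ == "__main__":
    for name, stats in compare().items():
        print(name, stats)
```

In this toy setup both estimators target the same quantity, but individual log-ratio samples can dip below zero, while the per-step version is a sum of nonnegative terms and so cannot.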