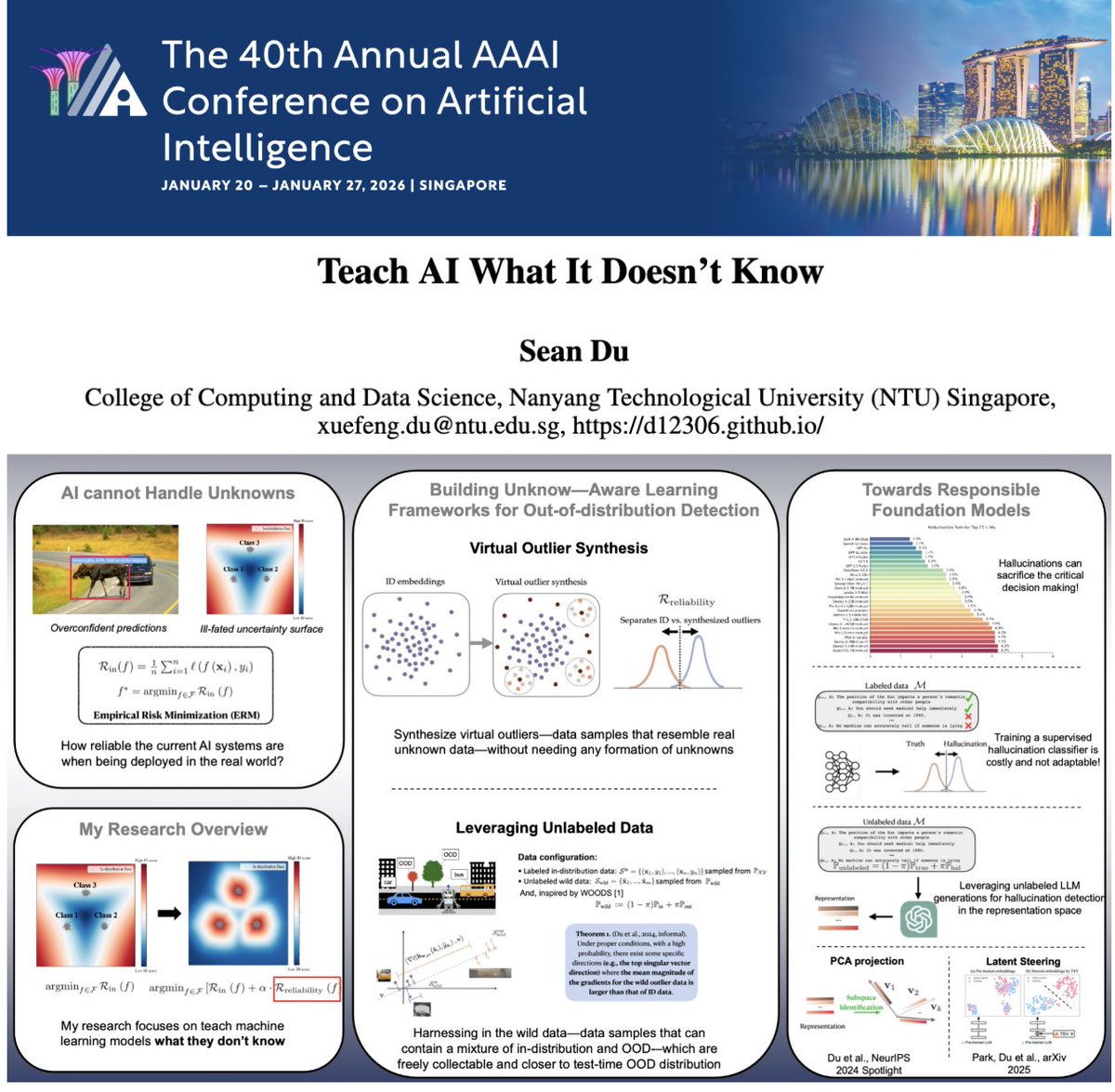

Sean Xuefeng Du

@xuefeng_du

Ph.D. student @WisconsinCS, fellow @JaneStreetGroup, spending time @GoogleAI | reliable machine learning 🤖️ ⛑️

ID: 1095271887465111552

http://d12306.github.io 12-02-2019 10:42:21

208 Tweets

1.1K Followers

2.2K Following

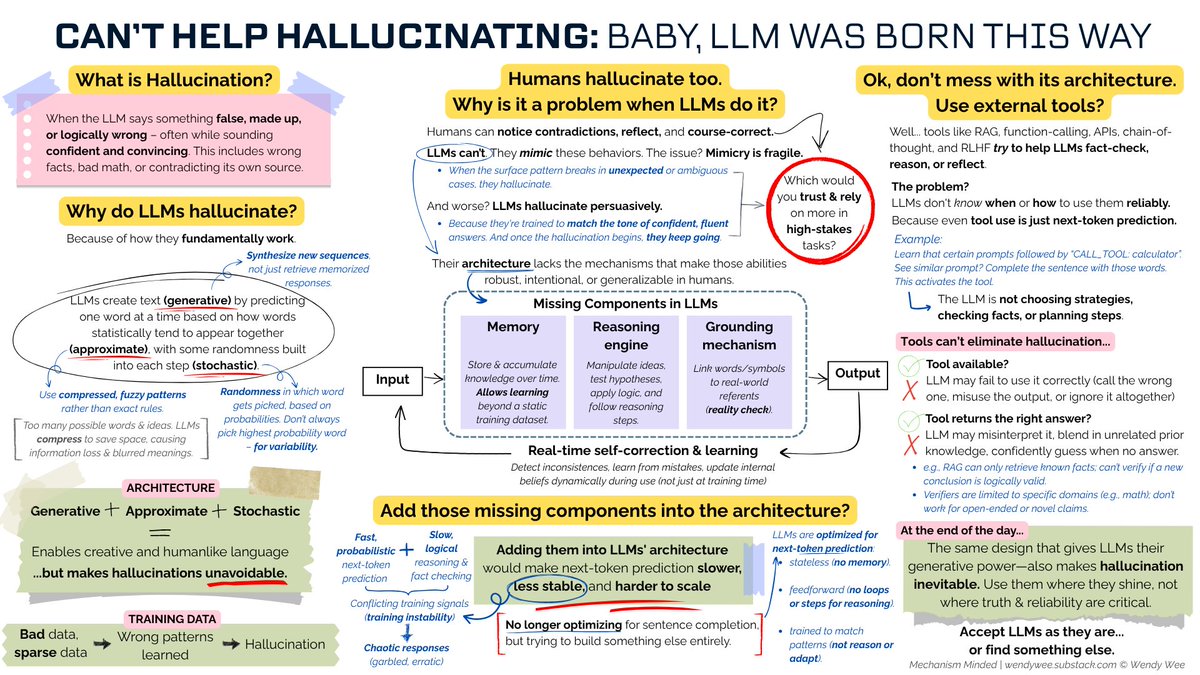

Hallucination is baked into LLMs. Can't be eliminated, it's how they work. Dario Amodei says LLMs hallucinate less than humans. But it's not about less or more. It's the differing & dangerous nature of the hallucination, making it unlikely LLMs will cause mass unemployment (1/n)

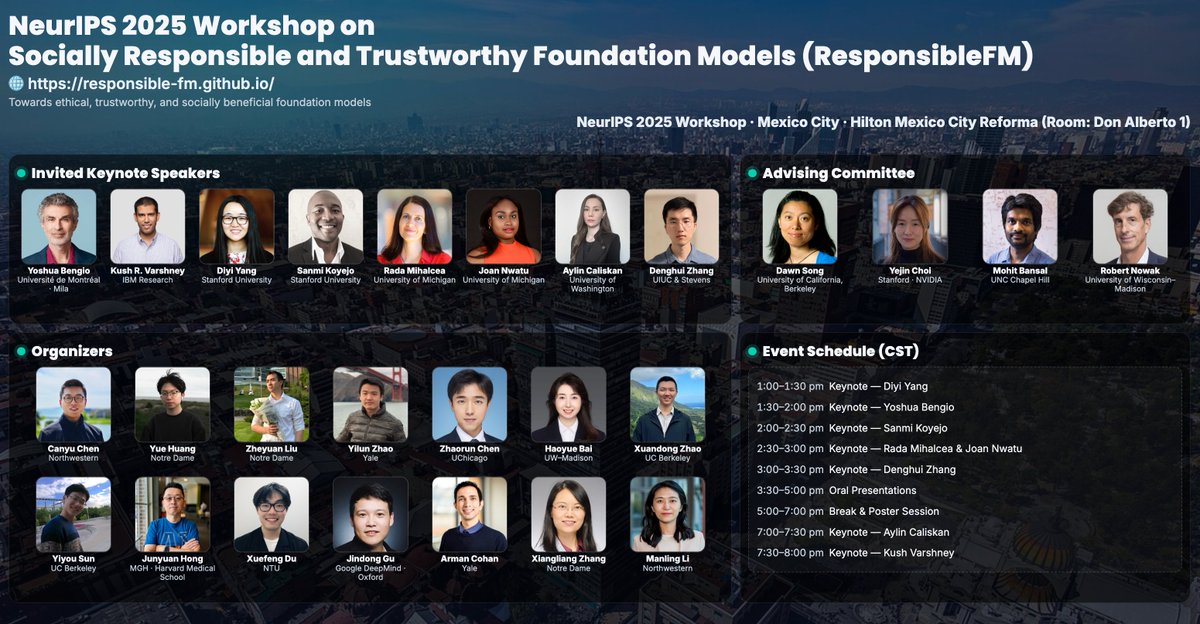

If you do research on responsible and trustworthy foundation models, please consider attending and submitting your work to our NeurIPS workshop! Thanks to Canyu Chen and the amazing team for inviting and organizing 🥳

Spread the word! 📢 The FATE (Fairness, Accountability, Transparency, and Ethics) group at Microsoft Research in NYC is hiring interns and postdocs to start in summer 2026! 🎉 Apply by *December 15* for full consideration.

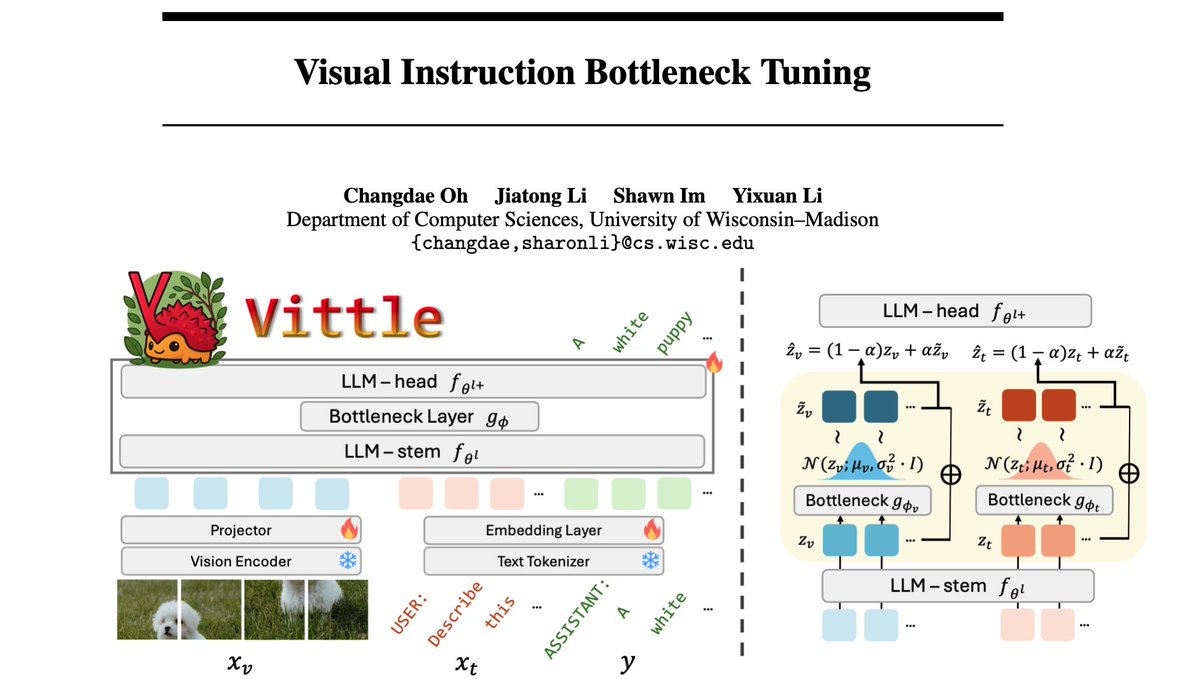

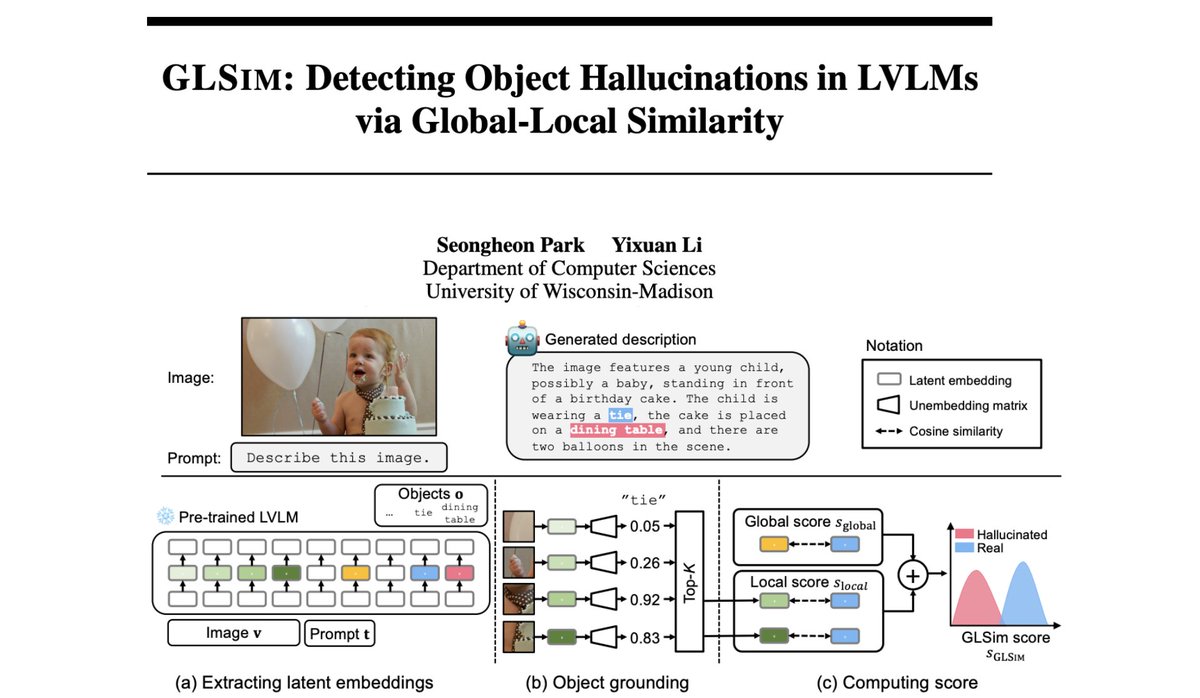

Heading to SD for #NeurIPS2025 soon! Excited that many students will be there presenting: Hyeong-Kyu Froilan Choi, Shawn Im, Leitian Tao, Seongheon Park, Changdae Oh, Samuel (Min Hsuan) Yeh, Galen Jiatong Li, Wendi Li, Xuanming Zhang. Let’s enjoy the AI conference while it lasts. You can find me at