Zhewei Yao

@yao_zhewei

Working on AI at @snowflakedb, @MSFTDeepSpeed core-contributor, @UCBerkeley Ph.D.

ID: 1240722392902590464

19-03-2020 19:30:51

24 Tweets

104 Followers

91 Following

With over 20K downloads per month, community engagement with the RedPajama-V2 dataset has been incredible. The 30 trillion tokens of data have been used to train leading models like the recently released SnowflakeDB Arctic LLM. We've compiled a list of FAQs for using it here:

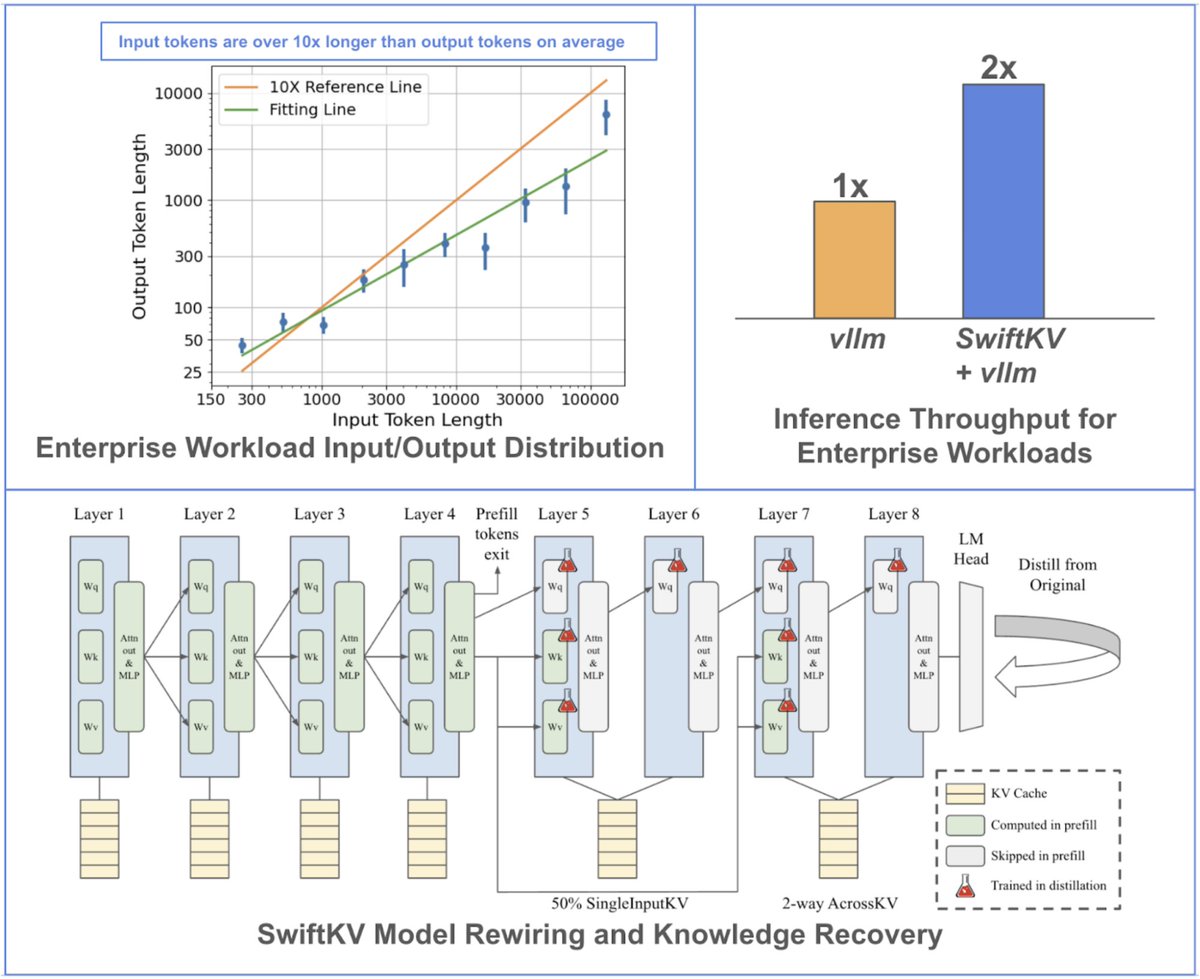

We are excited to share SwiftKV, our recent work at SnowflakeDB AI Research! SwiftKV reduces the pre-fill compute for enterprise LLM inference by up to 2x, resulting in higher serving throughput for input-heavy workloads. 🧵

Do you want ArcticTraining at SnowflakeDB to add the ability to post-train DeepSeek V3/R1 models with DPO using just a few GPU nodes? Please vote here and tell others about it: github.com/snowflakedb/Ar… ArcticTraining is an open-source, easy-to-use post-training framework.