Yash Kant

@yash2kant

ai phd @uoftcompsci // prev @meta @snap @georgiatech // now on job market! // web: yashkant.github.io

ID: 352228898

http://yashkant.github.io 10-08-2011 09:51:46

1.1K Tweets

850 Followers

492 Following

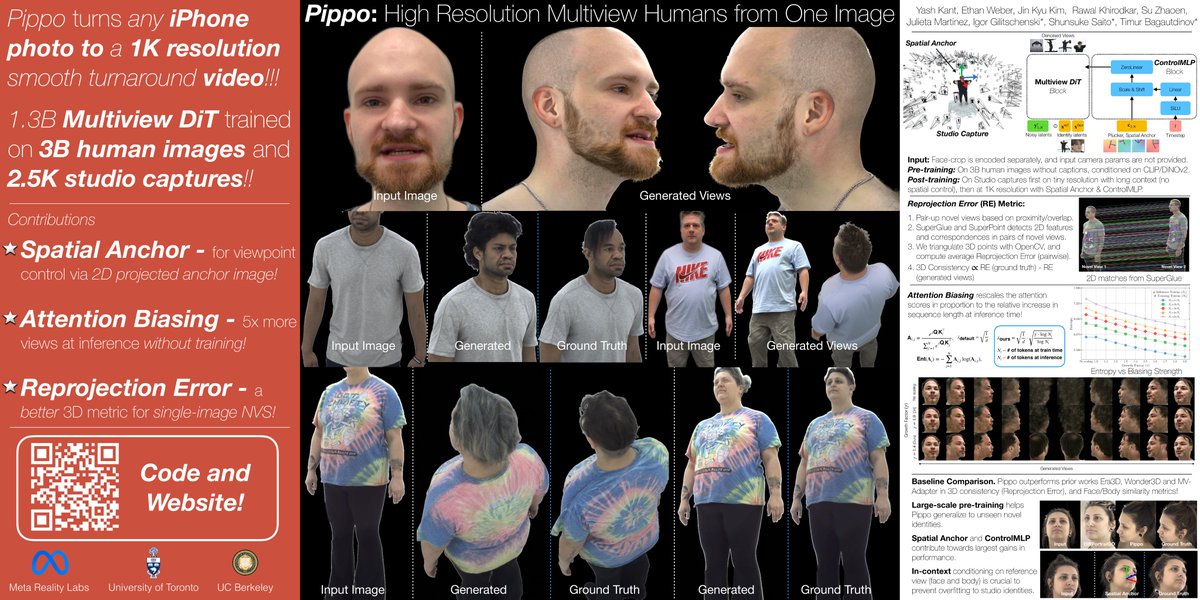

I will be at #CVPR25! 🤗 Please come chat with me and Ethan Weber during our poster session for Pippo on Sat, 5-7pm (Hall D)! 👋

Thrilled to share the papers that our lab will present at #CVPR2025. Learn more in this thread 🧵 and meet Kai He, Yash Kant @CVPR25, Ziyi Wu @ CVPR'25, and our previous visitor Toshiya Yura in Nashville! 1/n

Grateful to everyone who stopped by our oral presentation and posters during the whirlwind of #CVPR2025 — we know you had plenty of options! I'm at the Eyeline Studios Booth (1209) from now–3pm today. Come say hi — I’d love to chat about our research philosophy and how it ties

🚨🎬 MiniMax Hailuo AI (MiniMax)'s latest video model, Hailuo 02, is now available exclusively on fal's API platform. For just 28 cents per video, you can generate these beautiful shots with our API. fal.ai/models/fal-ai/… Links and examples below 👇👇

I'm attending Robotics: Science and Systems for the first time #RSS2025 in LA. Excited to be giving two invited talks at the Continual Learning and EgoAct workshops on Sat, June 21. I'll share the latest on 2D/3D motion prediction from human videos for manipulation! Do drop by and say hi :)

The latest research paper from Eyeline Studios, FlashDepth, has been accepted to the International Conference on Computer Vision (#ICCV2025). Our model produces accurate and high-resolution depth maps from streaming videos in real time and is completely built on open-source

Dear NeurIPS Conference -- it seems OpenReview is down entirely, and we cannot submit reviews for the upcoming review deadline tonight. Please share if you are having a similar issue. #neurips2025