Yin Aphinyanaphongs

@yindalon

ID: 61320791

29-07-2009 23:05:25

1.1K Tweets

178 Followers

100 Following

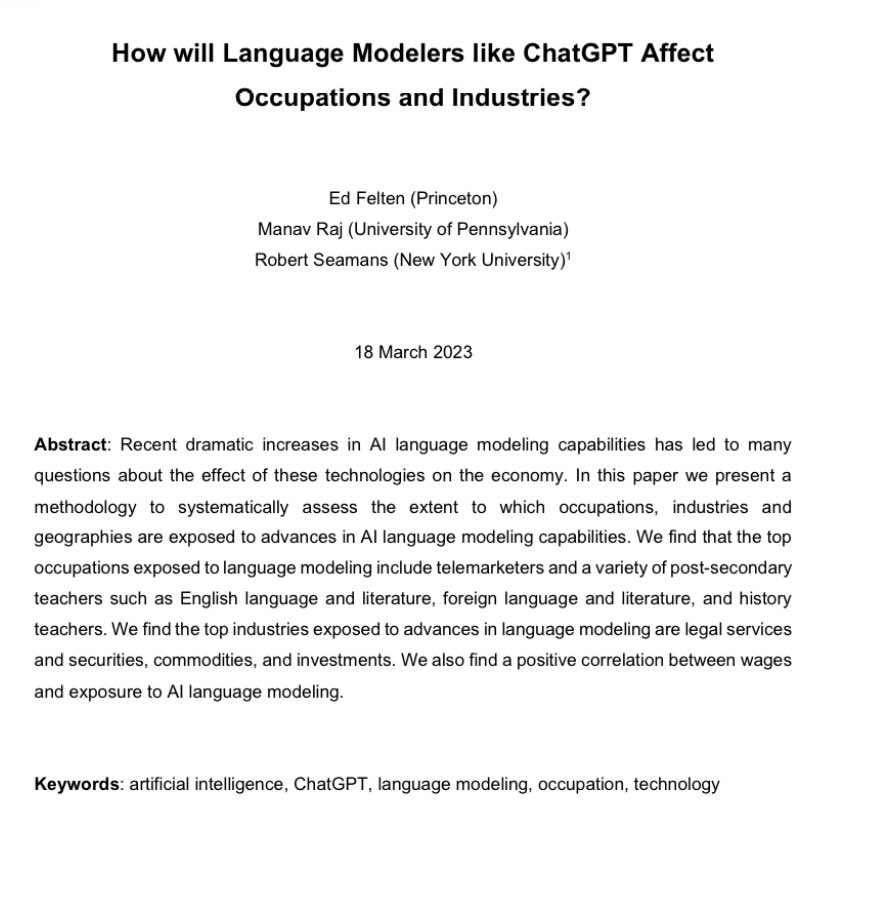

Economist Joshua Gans uses o1-pro to generate a (minor, fun) paper in an hour based on an idea of his, and it gets published in an appropriate peer-reviewed journal, with adequate disclosure. He ends with the same sentiment I am increasingly seeing from fellow academics: what now?

Also, these results were predicted by Daniel Rock & Rob Seamans and co-authors well over a year ago. Nice validation of early studies of the impact of generative AI. Probably worth checking out which fields they see impacted next.