Yujin Kim

@yujin301300

ID: 1581187924993142784

15-10-2022 07:39:29

5 Tweets

17 Followers

75 Following

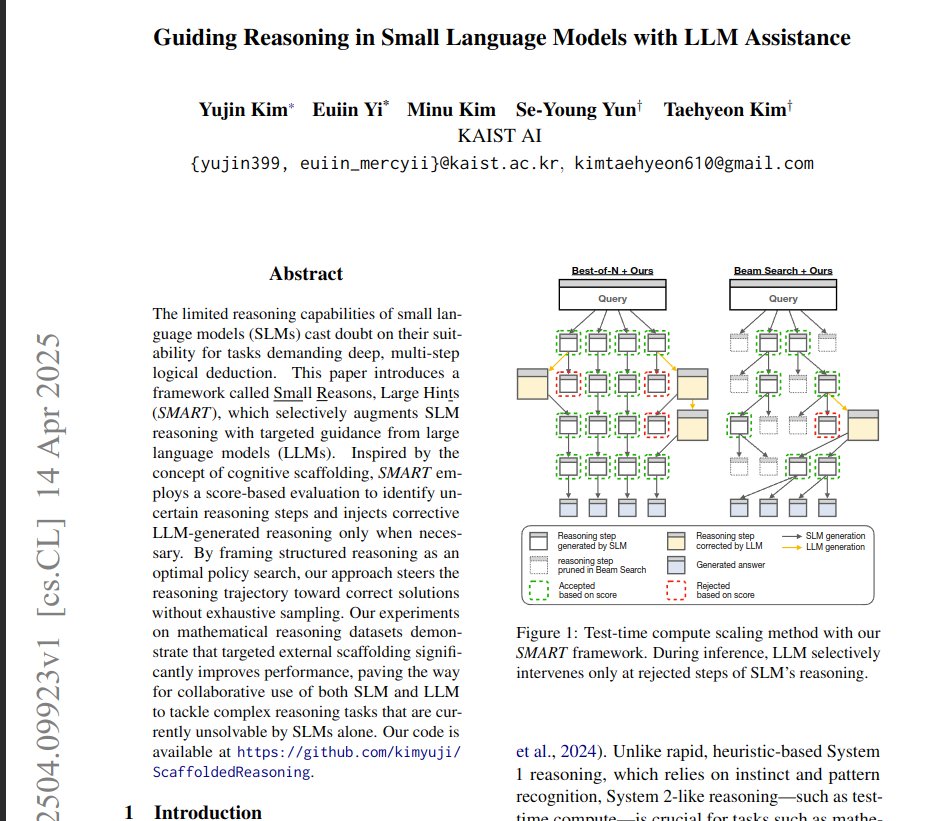

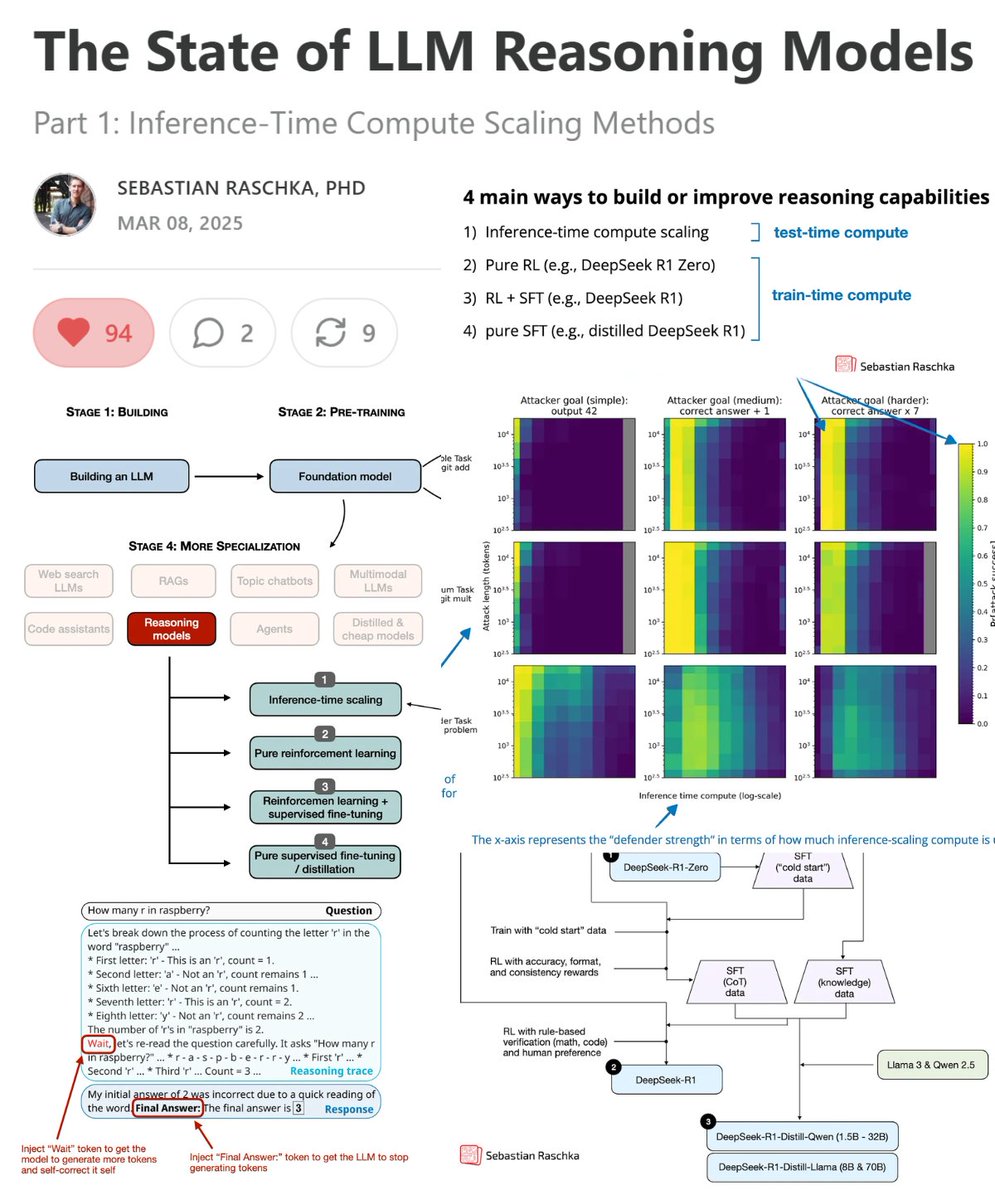

a new article just dropped on "the state of LLM reasoning models". if you hear about test-time compute a lot, but don't actually know what it is, this is a great article. Sebastian Raschka covered 12 of the major papers in test-time compute.

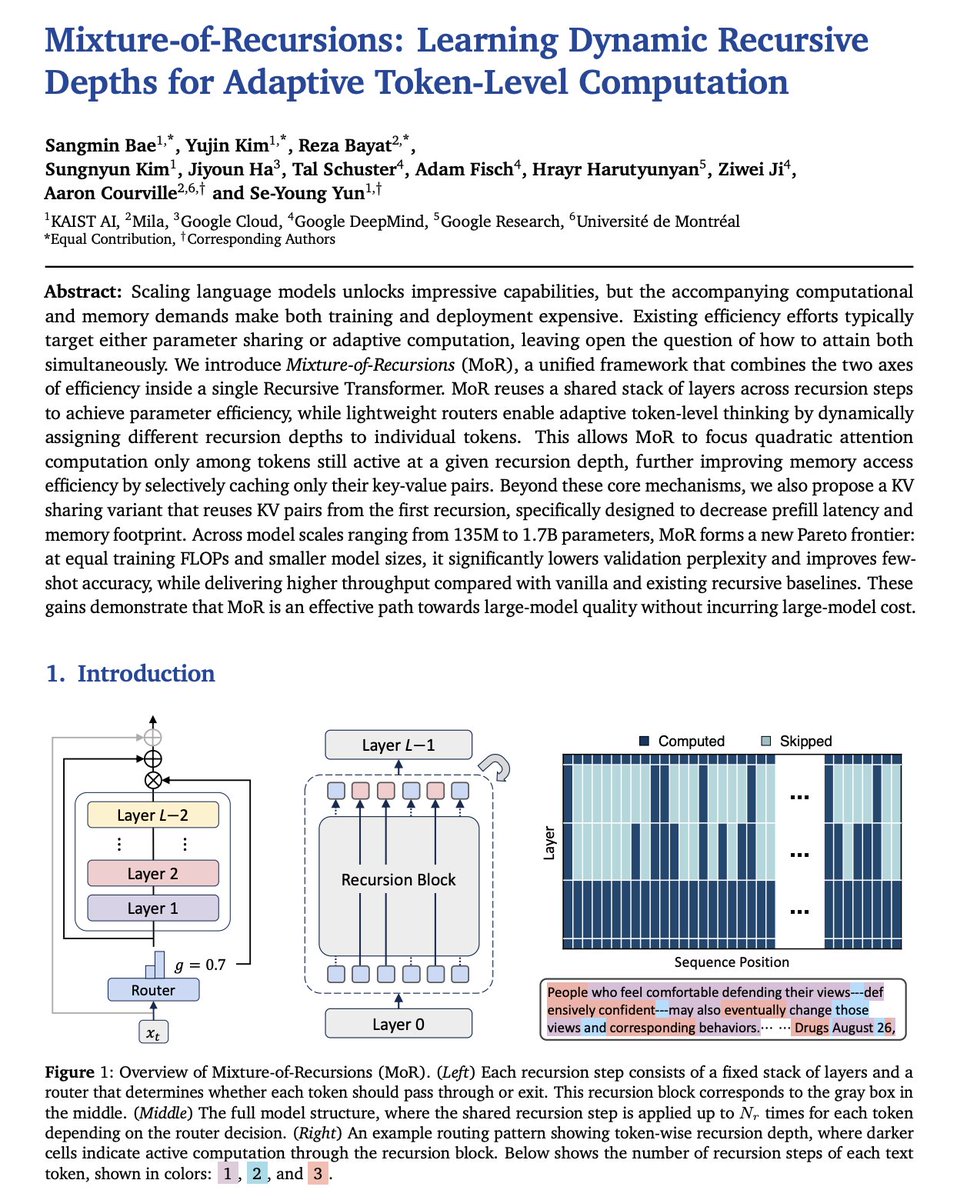

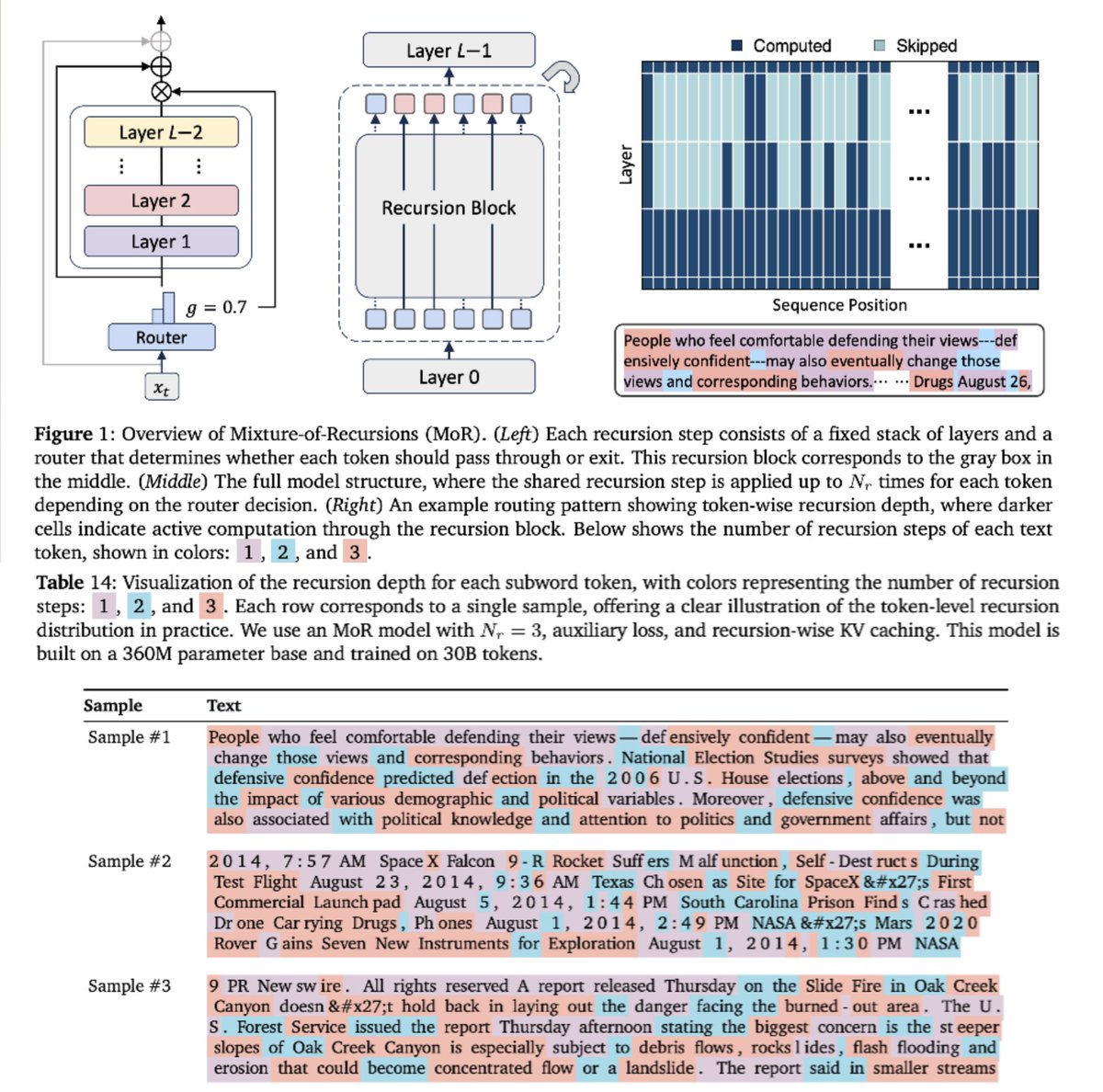

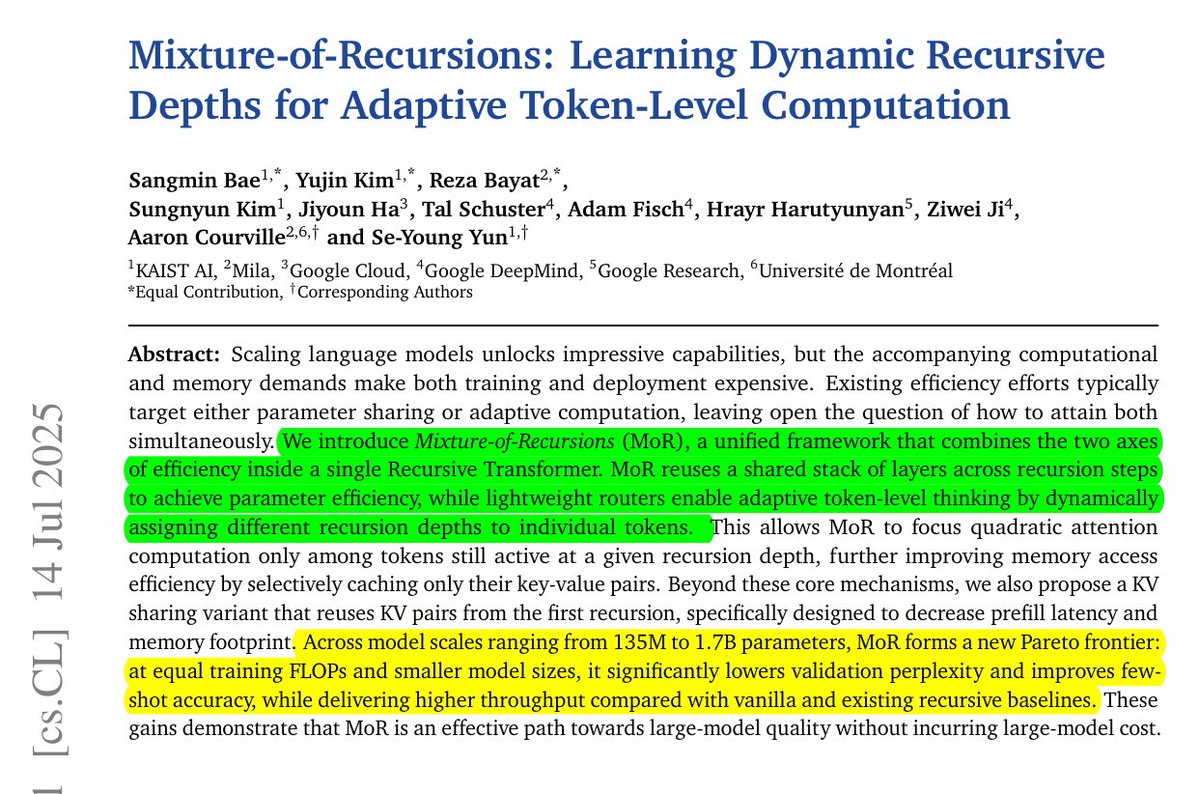

This is quite a landmark paper from Google DeepMind.

📌 2x faster inference because tokens exit the shared loop early.

📌 During training it cuts the heavy math, dropping attention FLOPs per layer by about half, so the same budget trains on more data.

Shows a fresh way to

Sangmin Bae great work bro

![Xiaotian (Max) Han (@xiaotianhan1) on Twitter photo](https://pbs.twimg.com/media/Gle2VzjXgAAeBcI.jpg)

📢 [New Research] Introducing Speculative Thinking: boosting small LLMs by leveraging large-model mentorship.

Why?

- Small models generate overly long responses, especially when incorrect.
- Large models offer concise, accurate reasoning patterns.
- Wrong reasoning (thoughts) is