Yuke Zhu

@yukez

Assistant Professor @UTCompSci | Co-Leading GEAR @NVIDIAAI | CS PhD @Stanford | Building generalist robot autonomy in the wild | Opinions are my own

ID: 15751831

https://yukezhu.me 06-08-2008 16:25:20

335 Tweets

18,18K Followers

461 Following

For the past two years, Jake Grigsby and I have been exploring how to make Transformer-based RL scale the same way as its supervised learning counterparts. Our AMAGO line of work shows promise for building RL generalists in multi-task settings. Meet him and chat at #NeurIPS2024!

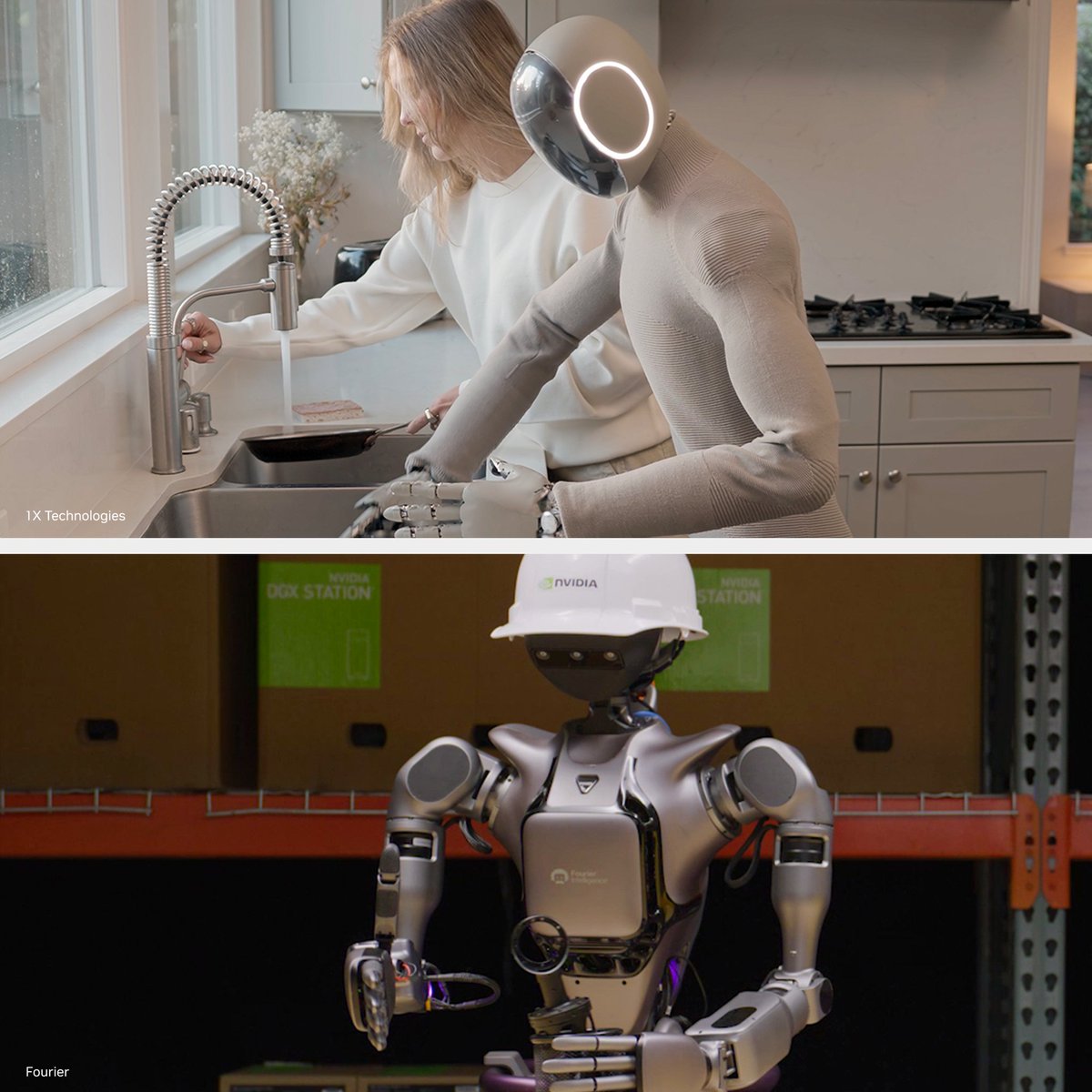

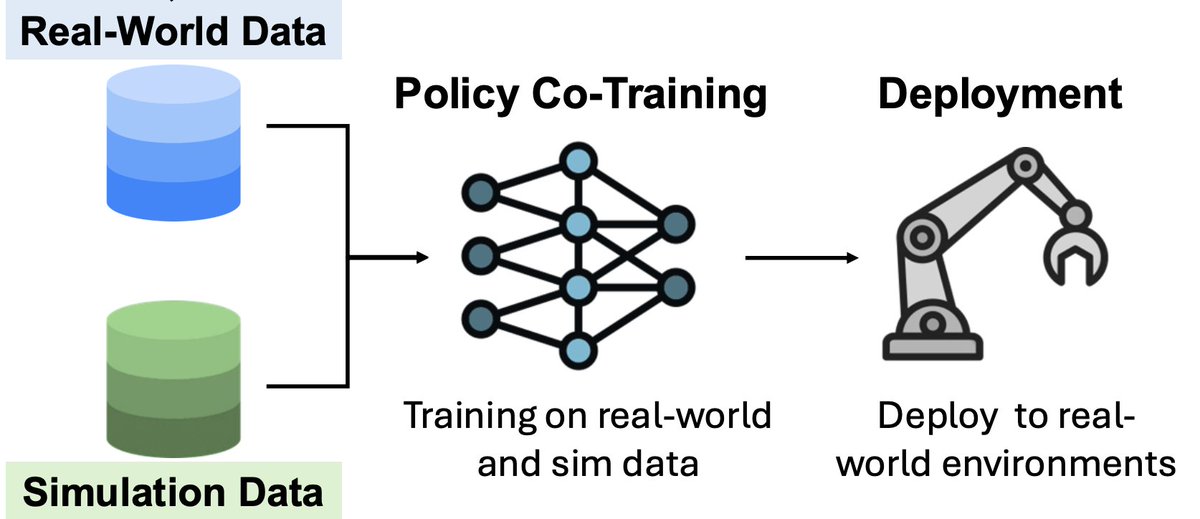

Full episode dropping tomorrow! Geeking out with Toru on toruowo.github.io/recipe/ (Sim-to-Real Reinforcement Learning for Vision-Based Dexterous Manipulation on Humanoids). Co-hosted by Chris Paxton & Michael Cho - Rbt/Acc