Yuda Song @ ICLR 2025

@yus167

PhD @mldcmu. Previously @ucsd_cse @UcsdMathDept

ID: 1250678066742874113

https://yudasong.github.io

16-04-2020 06:51:08

113 Tweets

359 Followers

260 Following

new preprint with the amazing Luca Viano and Gergely Neu on offline imitation learning! when the expert is hard to represent but the environment is simple, estimating a Q-value rather than the expert directly may be beneficial. there are many open questions left though!

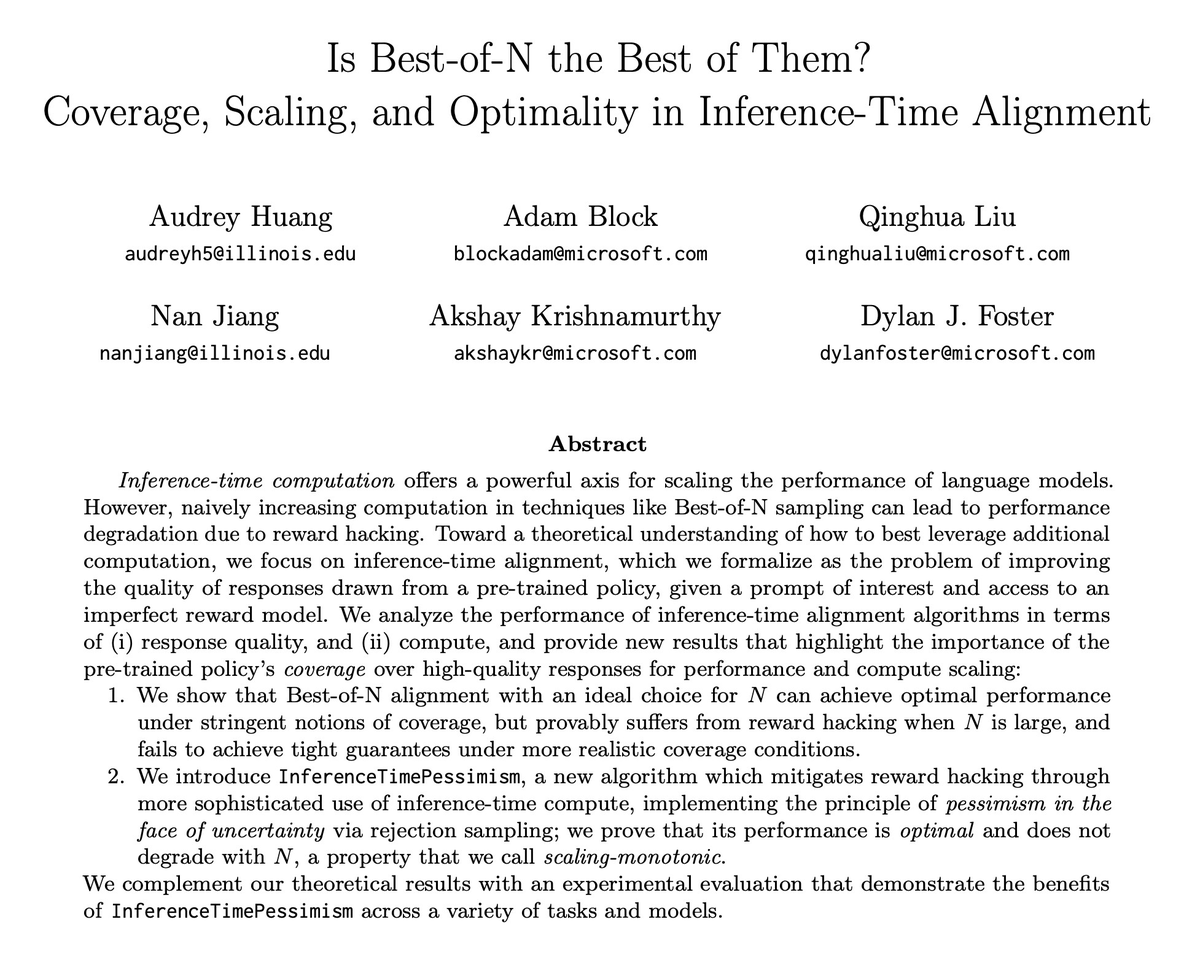

Still noodling on this, but the generation-verification gap proposed by Yuda Song, Hanlin Zhang, Sham Kakade, Udaya Ghai et al. in arxiv.org/abs/2412.02674 is a very nice framework that unifies a lot of thoughts around self-improvement/verification/bootstrapping reasoning.

Discussing "Mind the Gap" tonight at Haize Labs's NYC AI Reading Group with Leonard Tang and will brown. Authors study self-improvement through the "Generation-Verification Gap" (model's verification ability over its own generations) and find that this capability log scales with
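A toy Python sketch of the gap described above follows. It is a simplification that may not match the paper's exact definition: the gap is taken as the accuracy of the one sample the model itself scores highest among n generations, minus the average accuracy of all n unfiltered generations. `gv_gap`, `generate`, `verify`, and `is_correct` are hypothetical names supplied by the caller, not an API from the paper.

```python
from collections.abc import Callable, Sequence


def gv_gap(
    prompts: Sequence[str],
    generate: Callable[[str, int], list[str]],  # hypothetical: draws n samples from the model
    verify: Callable[[str, str], float],  # hypothetical: the model's own score for a sample
    is_correct: Callable[[str, str], bool],  # hypothetical: ground-truth checker
    n: int = 8,
) -> float:
    """Toy generation-verification gap (assumed best-of-n form)."""
    base_acc = 0.0  # accuracy of raw generations
    filtered_acc = 0.0  # accuracy after self-verification filtering
    for p in prompts:
        samples = generate(p, n)
        # Average correctness of the unfiltered samples for this prompt.
        base_acc += sum(is_correct(p, s) for s in samples) / len(samples)
        # Keep the sample the model itself rates highest (ties: first one).
        best = max(samples, key=lambda s: verify(p, s))
        filtered_acc += float(is_correct(p, best))
    # Positive gap: self-verification improves over plain generation.
    return (filtered_acc - base_acc) / len(prompts)


if __name__ == "__main__":
    # Stub model: half of its samples are right, and its verifier is perfect.
    print(gv_gap(
        ["q1", "q2"],
        generate=lambda p, n: ["right", "wrong"],
        verify=lambda p, s: 1.0 if s == "right" else 0.0,
        is_correct=lambda p, s: s == "right",
    ))  # → 0.5
```

With an uninformative verifier (a constant score), the filtered pick is arbitrary and the gap can be zero or negative. That is the intuition behind measuring verification ability relative to generation ability.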