Yu Zhao

@yuzhaouoe

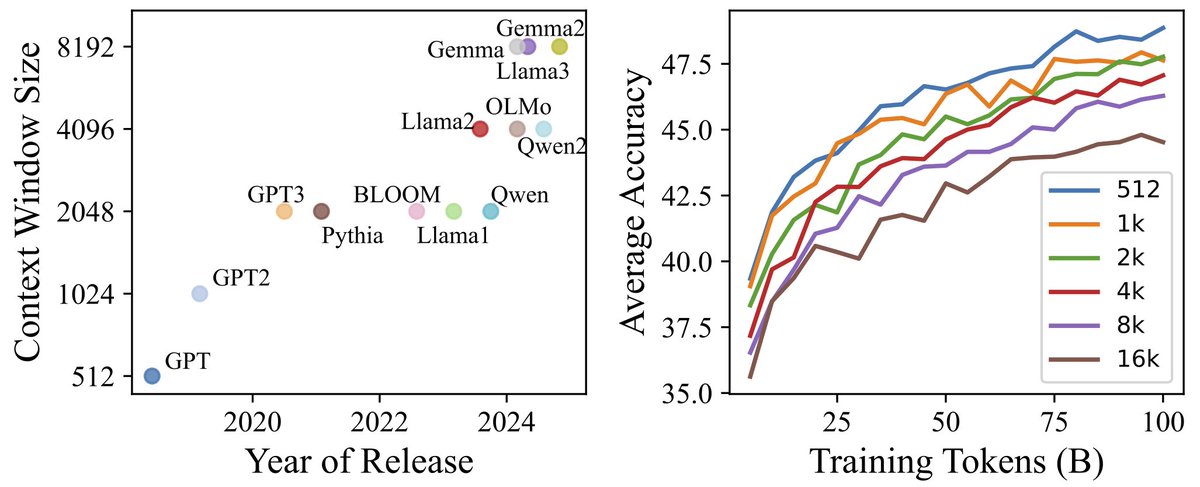

@EdinburghNLP NLP/ML | Opening the Black Box for Efficient Training/Inference

ID: 1511963648826097670

https://yuzhaouoe.github.io/ 07-04-2022 07:06:54

188 Tweets

351 Followers

593 Following

Still ~10 days to apply! Fully funded until 2029, and includes access to our HPC infra and some amazing teams! @EdinburghNLP

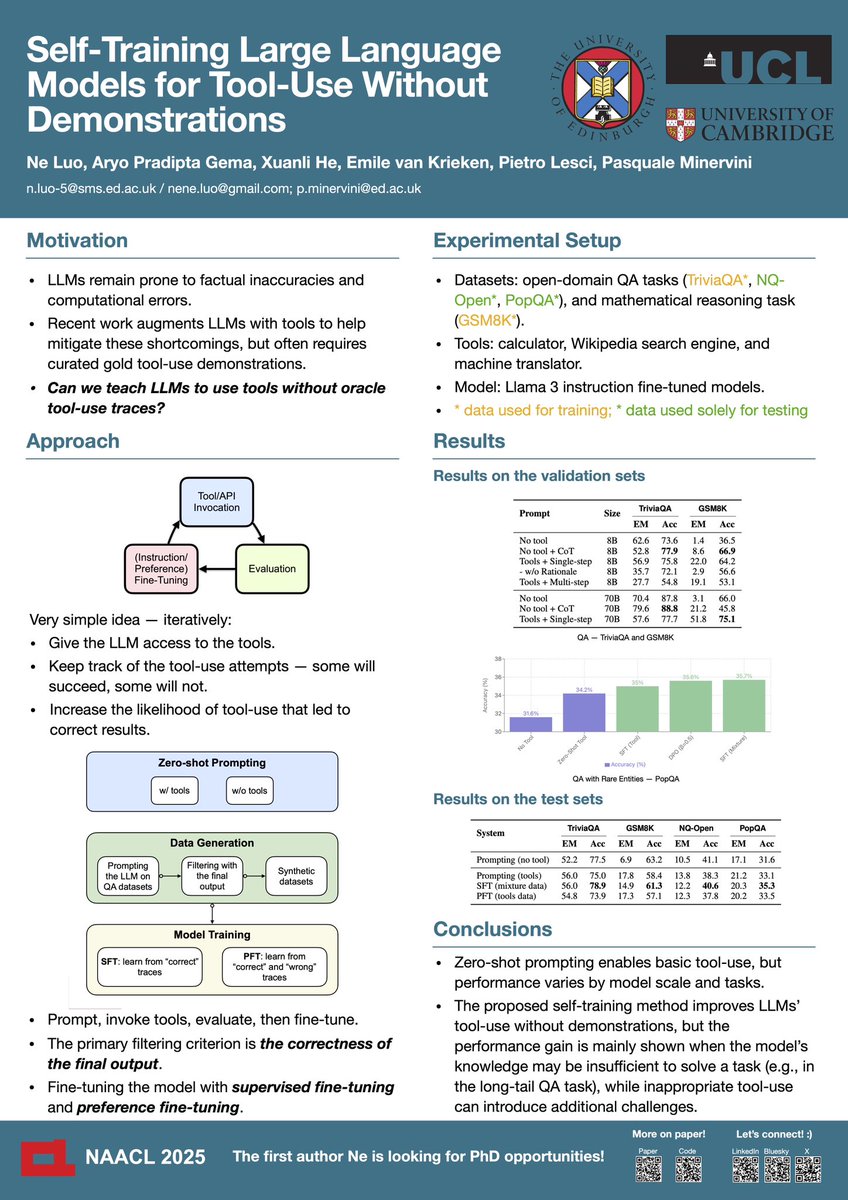

Hi! I will be attending #NAACL2025 and presenting our paper on self-training for tool-use today, an extension of my MSc dissertation at EdinburghNLP, supervised by Pasquale Minervini. Time: 14:00-15:30. Location: Hall 3. Let’s chat and connect!😊