Dinghuai Zhang 张鼎怀

@zdhnarsil

Researcher at @MSFTResearch. Prev: PhD at @Mila_Quebec, intern at @Apple MLR and FAIR Labs @MetaAI, math undergraduate at @PKU1898.

ID: 2489747113

http://zdhnarsil.github.io 11-05-2014 11:55:14

652 Tweets

3.3K Followers

1.1K Following

Chenghao Liu will work with FutureHouse and Nobel laureate Frances Arnold at Caltech to develop closed-loop generative machine learning workflows for de novo enzyme discovery. Chenghao was a co-founder of Dreamfold and is known for combining physical organic chemistry

Excited to share our work with my amazing collaborators, Goodeat, Xingjian Bai, Zico Kolter, and Kaiming. In a word, we show an “identity learning” approach for generative modeling, by relating the instantaneous/average velocity in an identity. The resulting model,

Happy to share that our paper "The ICML 2023 Ranking Experiment: Examining Author Self-Assessment in ML/AI Peer Review" will appear in JASA as a Discussion Paper: arxiv.org/abs/2408.13430 It's a privilege to work with such a wonderful team: Buxin, Jiayao, Natalie Collina,

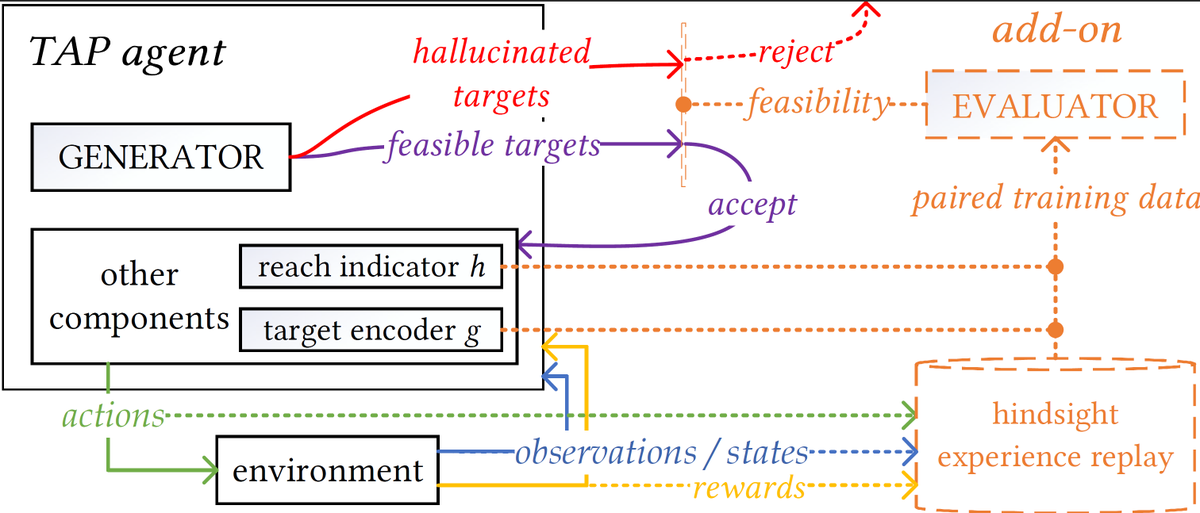

Our paper on rejecting hallucinated planning targets is now accepted at ICML Conference 2025! 📜: arxiv.org/abs/2410.07096 💿: github.com/mila-iqia/delu… "Rejecting Hallucinated State Targets during Planning" - Authors: Harry Zhao, Tristan, Romain Laroche, Doina Precup, Yoshua Bengio