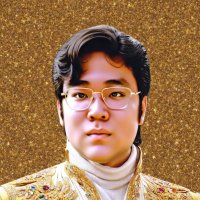

Zhengfei Kuang

@zfkuang1

Ph.D. student at Stanford University

ID: 1158430116998811652

http://zhengfeikuang.com

05-08-2019 17:30:37

15 Tweets

151 Followers

90 Following

Check out our SIGGRAPH ASIA 2020 Paper: Dynamic Facial Asset and Rig Generation from a Single Scan! Great collaboration with Zhengfei Kuang, Real Yajie Zhao, Mingming He, Karl Bladin, and Hao Li! Demo: youtube.com/watch?v=VV655f… Paper: arxiv.org/pdf/2010.00560…

#CVPR Can we estimate human motion from egocentric videos? Check out our Ego-Body Pose Estimation via Ego-Head Pose Estimation (Award Candidate)! w Karen and Jiajun Wu. Poster: THU-AM-063. (I will be on Zoom for Q&A!) Project page: (code is released!) lijiaman.github.io/projects/egoeg…

Accepted to CVPR! 😇 #CVPR2025 #CVPR2024 Congrats to the team at Stanford and HKUST: Zifan Shi, Yifan Wang, Yinghao Xu, Ryan Po, Zhengfei Kuang, Qifeng Chen, Dit-Yan Yeung, and Gordon Wetzstein. See you in Seattle!

#ECCV24 We introduce Controllable Human-Object Interaction Synthesis (CHOIS) to generate interactions given text in a 3D scene. w alex, Roozbeh Mottaghi, Jiajun Wu, Xavier Puig, Karen. code: github.com/lijiaman/chois… project page: lijiaman.github.io/projects/chois/