Zixuan Zhang

@zhangzxuiuc

NLP Researcher,

PhD Candidate @ CS UIUC (zhangzx-uiuc.github.io)

ID: 1493395075497398272

15-02-2022 01:21:56

6 Tweets

66 Followers

61 Following

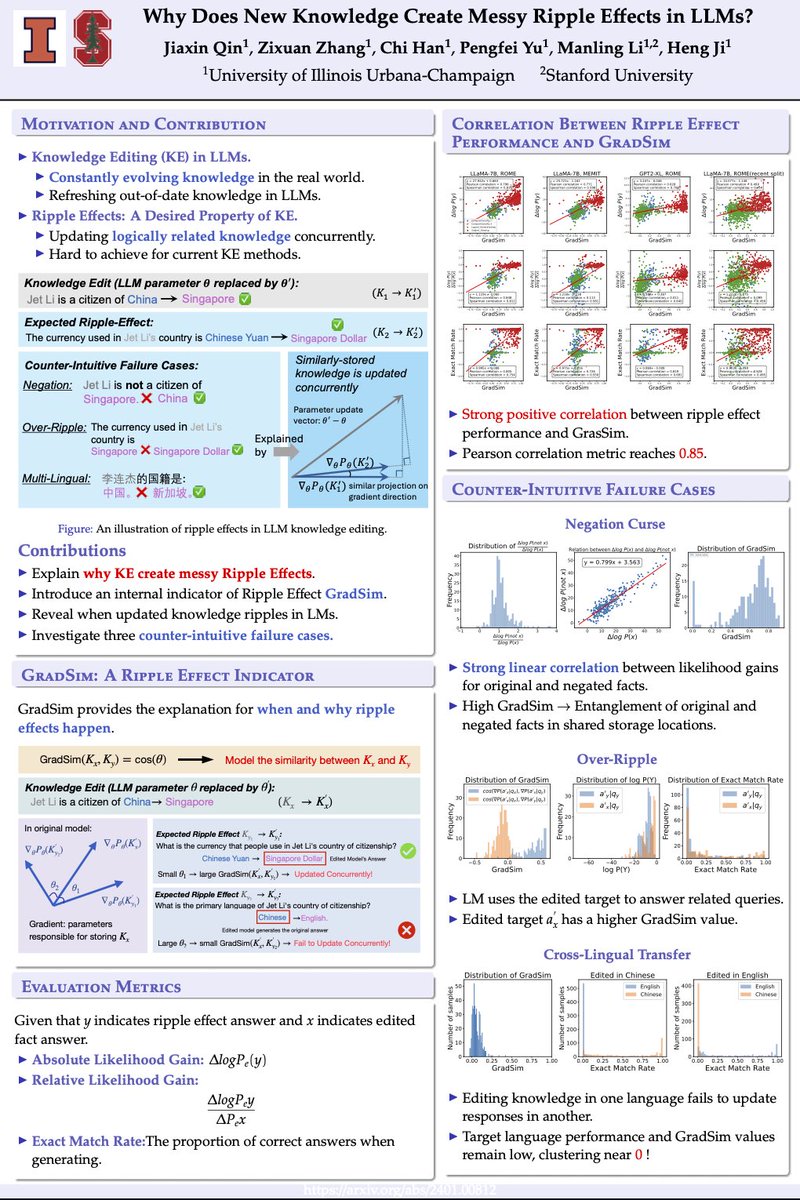

I am at #EMNLP2024! I will present our work "Why Does New Knowledge Create Messy Ripple Effects in LLMs?" on Wed at 10:30am. Thanks to all the collaborators: Heng Ji, Zixuan Zhang, Chi Han, and Manling Li. Looking forward to having a chat! Paper link: arxiv.org/pdf/2407.12828