Martin Ziqiao Ma

@ziqiao_ma

〽️ PhD @UMichCSE | 💼 @IBM @Adobe @Amazon | @ACLMentorship | Weinberg Cogsci Fellow | Cogsci x Multimodality | 💬 Language Grounding & Alignment to 👥 & 👀.

ID: 1194045284621438980

http://ziqiaoma.com/ 12-11-2019 00:12:44

786 Tweets

2.2K Followers

1.1K Following

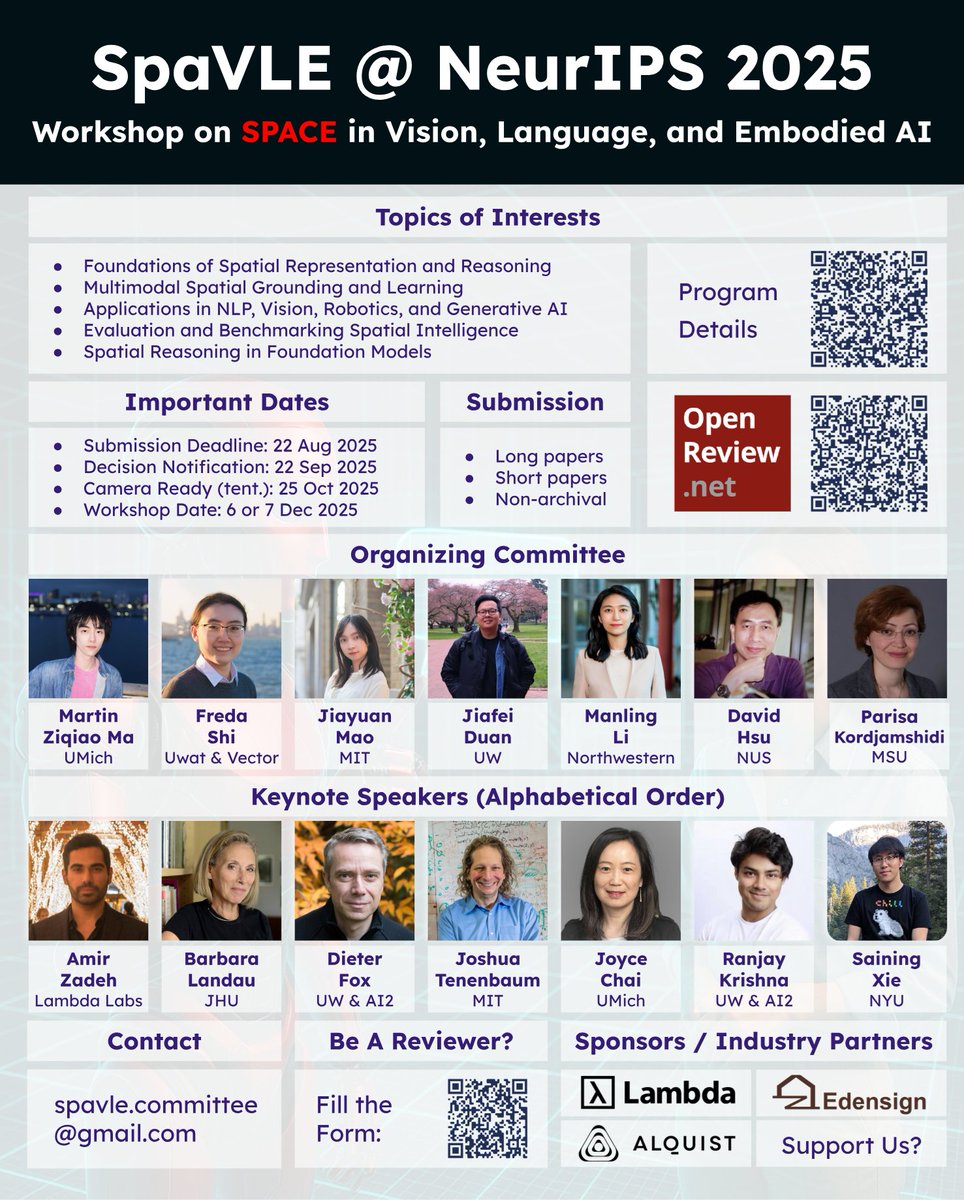

📣 Excited to announce SpaVLE: #NeurIPS2025 Workshop on Space in Vision, Language, and Embodied AI! 👉 …vision-language-embodied-ai.github.io 🦾Co-organized with an incredible team → Freda Shi · Jiayuan Mao · Jiafei Duan · Manling Li · David Hsu · Parisa Kordjamshidi 🌌 Why Space & SpaVLE? We