José Maria Pombal

@zmprcp

Research Scientist @unbabel, PhD student @istecnico.

ID: 1633224223454797826

http://zeppombal.github.io 07-03-2023 21:53:17

53 Tweets

81 Followers

103 Following

Good to see European Commission promoting OS LLMs in Europe. However (1) "OpenEuroLLM" is appropriating a name (#EuroLLM) which already exists, (2) it is certainly *not* the "first family of open-source LLMs covering all EU languages" 🧵

Brilliant and necessary work by José Maria Pombal et al. about metric interference in MT system development and evaluation: arxiv.org/abs/2503.08327 Are we developing better systems or are we just gaming the metrics? And how do we address this? Super (m)interesting! 👀

Our pick of the week by Andrea Piergentili: "Adding Chocolate to Mint: Mitigating Metric Interference in Machine Translation" by José Pombal, Nuno M. Guerreiro, Ricardo Rei, and Andre Martins (2025). #mt #translation #metric #machinetranslation

Unbabel exposes 🔎 how using the same metrics for both training and evaluation can create misleading ⚠️ #machinetranslation performance estimates and proposes how to solve this with MINTADJUST. José Maria Pombal, Ricardo Rei, Andre Martins #translation #xl8 #MT slator.ch/UnbabelBiasAIT…

Here's our new paper on m-Prometheus, a series of multilingual judges! 1/ Effective at safety & translation eval 2/ Also stands out as a good reward model in BoN 3/ Backbone model selection & training on natively multilingual data are important. Check out José Maria Pombal's post!

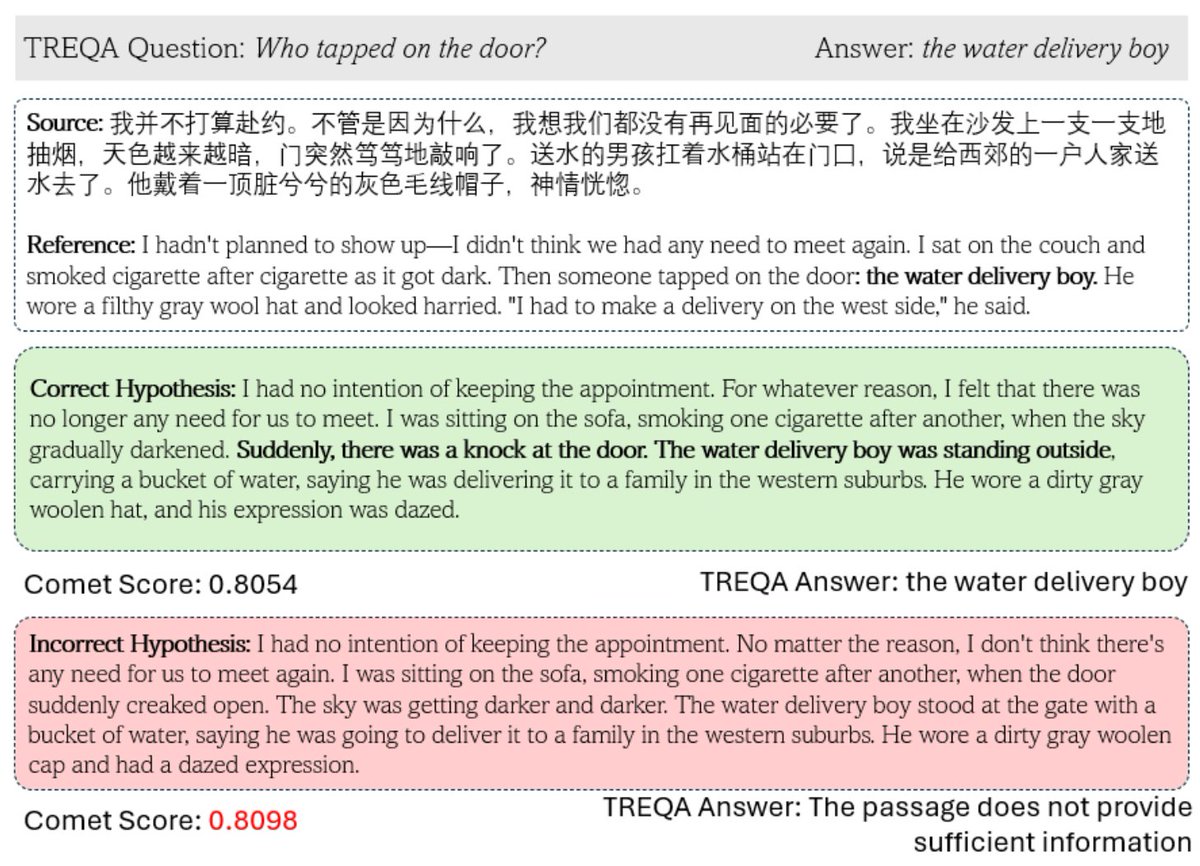

MT metrics excel at evaluating sentence translations, but struggle with complex texts. We introduce *TREQA*, a framework to assess how translations preserve key info by using LLMs to generate & answer questions about them: arxiv.org/abs/2504.07583 (co-lead Sweta Agrawal) 1/15