Zirui "Colin" Wang

@zwcolin

Incoming CS PhD @Berkeley_EECS; MSCS @princeton_nlp; '25 @siebelscholars; prev @HDSIUCSD; I work on multimodal foundation models; He/Him.

ID: 2986434572

http://ziruiw.net 17-01-2015 04:18:40

122 Tweets

1.1K Followers

528 Following

🔔 I'm recruiting multiple fully funded MSc/PhD students at the University of Alberta for Fall 2025! Join my lab working on NLP, especially reasoning and interpretability (see my website for more details about my research). Apply by December 15!

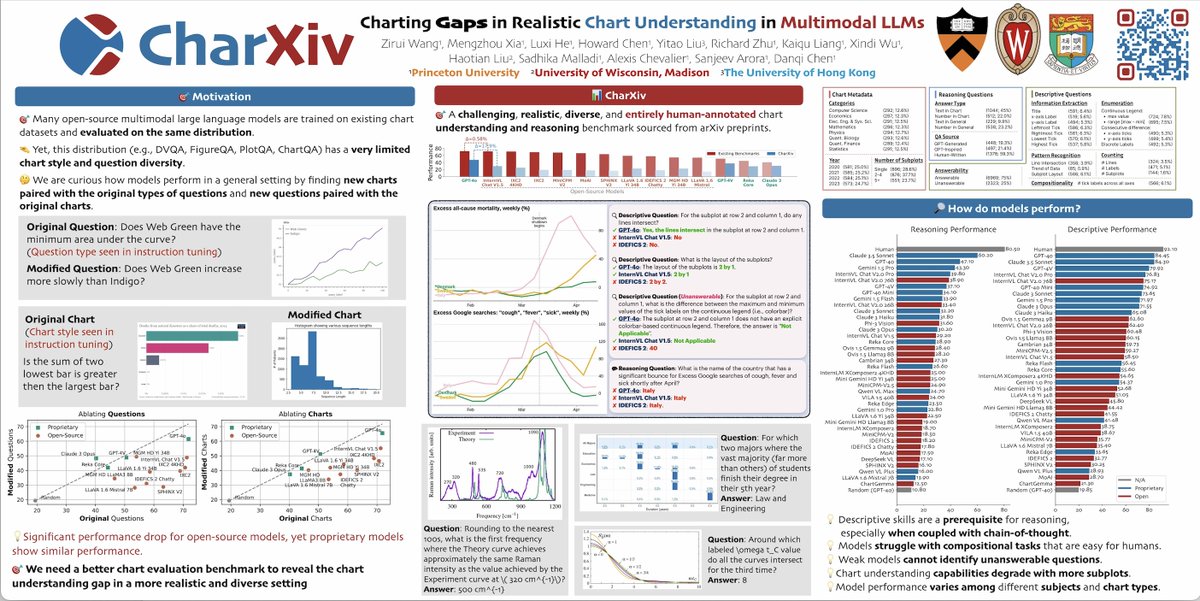

I’ve just arrived in Vancouver and am excited to join the final stretch of #NeurIPS2024! This morning, we are presenting 3 papers, 11am-2pm:
- Edge pruning for finding Transformer circuits (#3111, spotlight) Adithya Bhaskar
- SimPO (#3410) Yu Meng @ ICLR'25 Mengzhou Xia
- CharXiv (#5303)