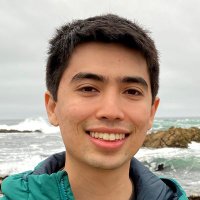

Nishant Balepur

@nishantbalepur

CS PhD Student. Trying to find that dog in me @UofMaryland. Babysitting (aligning) + Bullying (evaluating) #LLMs

ID: 768905924475879425

https://nbalepur.github.io/

25-08-2016 20:20:32

985 Tweets

534 Followers

403 Following

🧵 Multilingual safety training/eval is now standard practice, but a critical question remains: Is multilingual safety actually solved? Our new survey with Cohere Labs answers this and dives deep into:
- Language gap in safety research
- Future priority areas

Thread 👇

I'm excited to share that I’ll be joining Univ. of Maryland as an Assistant Professor in Computer Science, where I’ll be launching the Resilient AI and Grounded Sensing Lab. The RAGS Lab will build AI that works in chaotic environments. If you would like to partner, please DM me!

𝐖𝐡𝐚𝐭 𝐇𝐚𝐬 𝐁𝐞𝐞𝐧 𝐋𝐨𝐬𝐭 𝐖𝐢𝐭𝐡 𝐒𝐲𝐧𝐭𝐡𝐞𝐭𝐢𝐜 𝐄𝐯𝐚𝐥𝐮𝐚𝐭𝐢𝐨𝐧? I'm happy to announce that the preprint release of my first project is online! Developed with the amazing support of Abhilasha Ravichander and Ana Marasović (Full link below 👇)

I'm now a Ph.D. candidate! 🎉🥳 A few weeks ago, I proposed my thesis: "Teaching AI to Answer Questions with Reasoning that Actually Helps You". Thanks to my amazing committee + friends at the UMD CLIP Lab! 🫶 I won't be back in Maryland for a while; some exciting things are coming soon 👀