Afra Amini

@afra_amini

Ph.D. student at ETH AI Center, ex-intern @GoogleDeepMind

ID: 1439874627434582017

https://afraamini.github.io/

20-09-2021 08:50:47

40 Tweets

420 Followers

276 Following

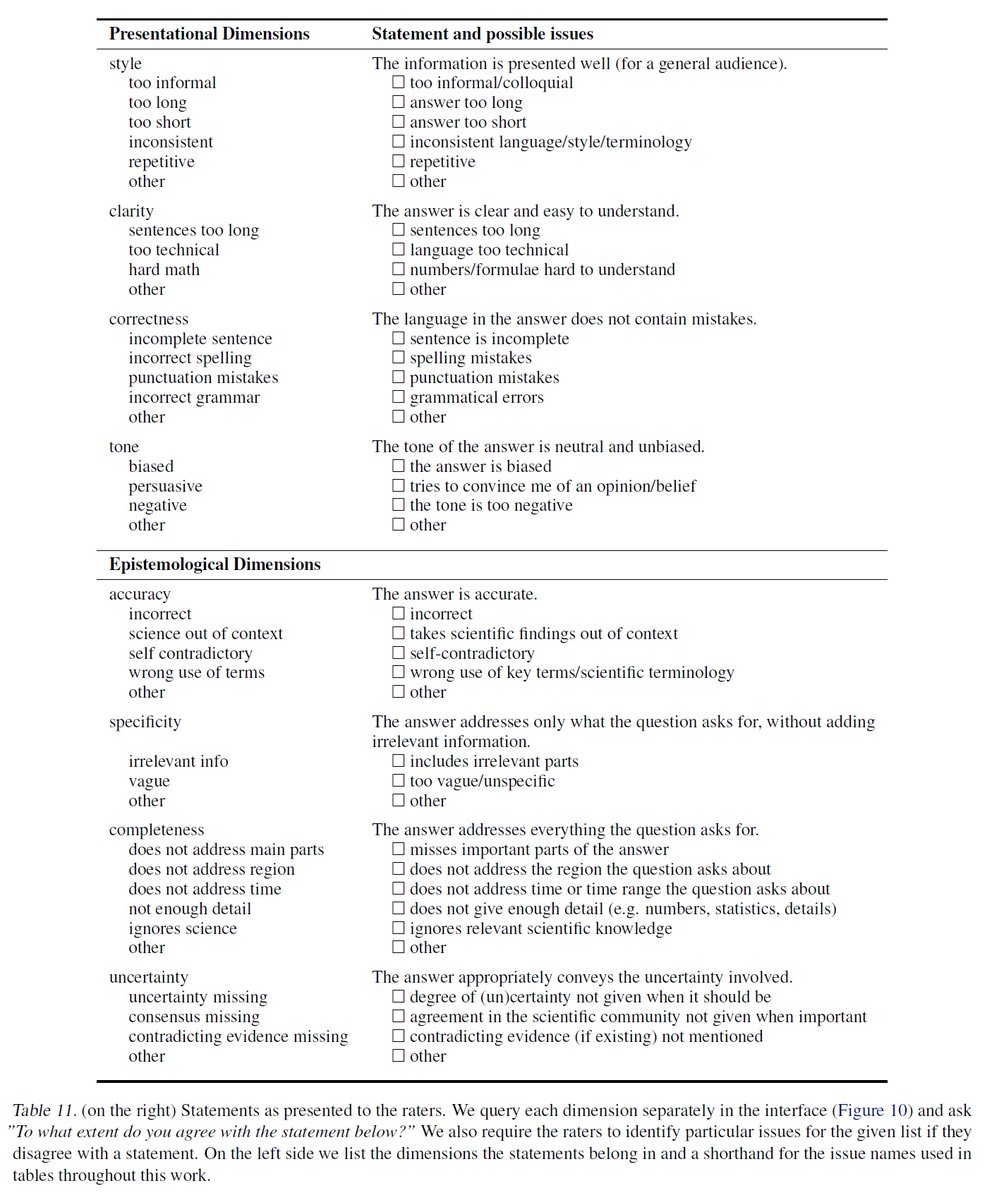

How well do different #LargeLanguageModels perform in portraying #climatechange information❓ Paper w/ colleagues from Google DeepMind & ETH Zürich - accepted for ICML Conference, one of the World's leading #machinelearning conferences Link (open access)➡️openreview.net/forum?id=ScIHQ… Thread⬇️

Our new mechanistic interpretability work "Activation Scaling for Steering and Interpreting Language Models" was accepted into Findings of EMNLP 2024! 🔴🔵 📄arxiv.org/pdf/2410.04962 Kevin Du, Vésteinn Snæbjarnarson, Bob West, Ryan Cotterell and Aaron Schein thread 👇

🚨 Recruiting PhD students to join my team at DTU Visual Computing & Pioneer Centre for AI on 3D Vision! 🇩🇰 🚲🌊🏰 Copenhagen is an emerging hub for computer vision with a thriving community—and it’s an amazing city! 📢 Apply now: efzu.fa.em2.oraclecloud.com/hcmUI/Candidat… & please reach out with any questions!